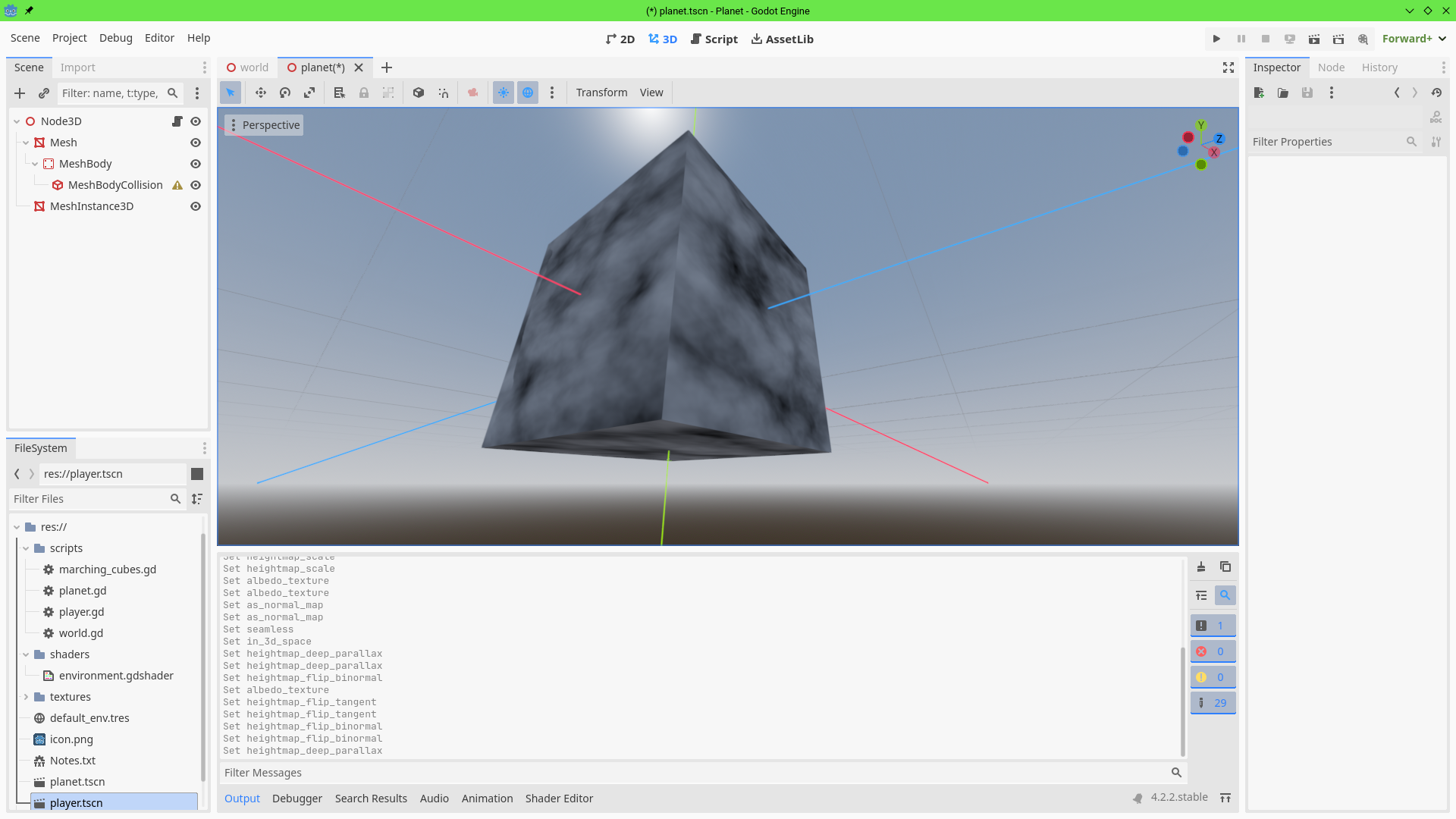

There's often talk of how nice it would be to have built-in tessellation support. I fully agree, but I also think an even more powerful alternative exists: proper parallax that can truly create the illusion of displacement. The default StandardMaterial3D shader has a Height section where a heightmap indicates depth, but the effect remains unrealistic even with the Deep Parallax setting (Parallax Occlusion Mapping). The issue isn't even how bad the distortion can sometimes look, but rather that the effect is limited by the geometry of the surface it's on. If this hard limitation could be lifted efficiently, we could achieve an incredible level of detail that trumps tessellation while using a ridiculously low number of polygons on the underlying mesh!

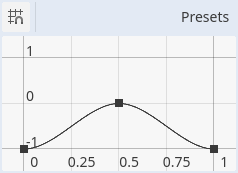

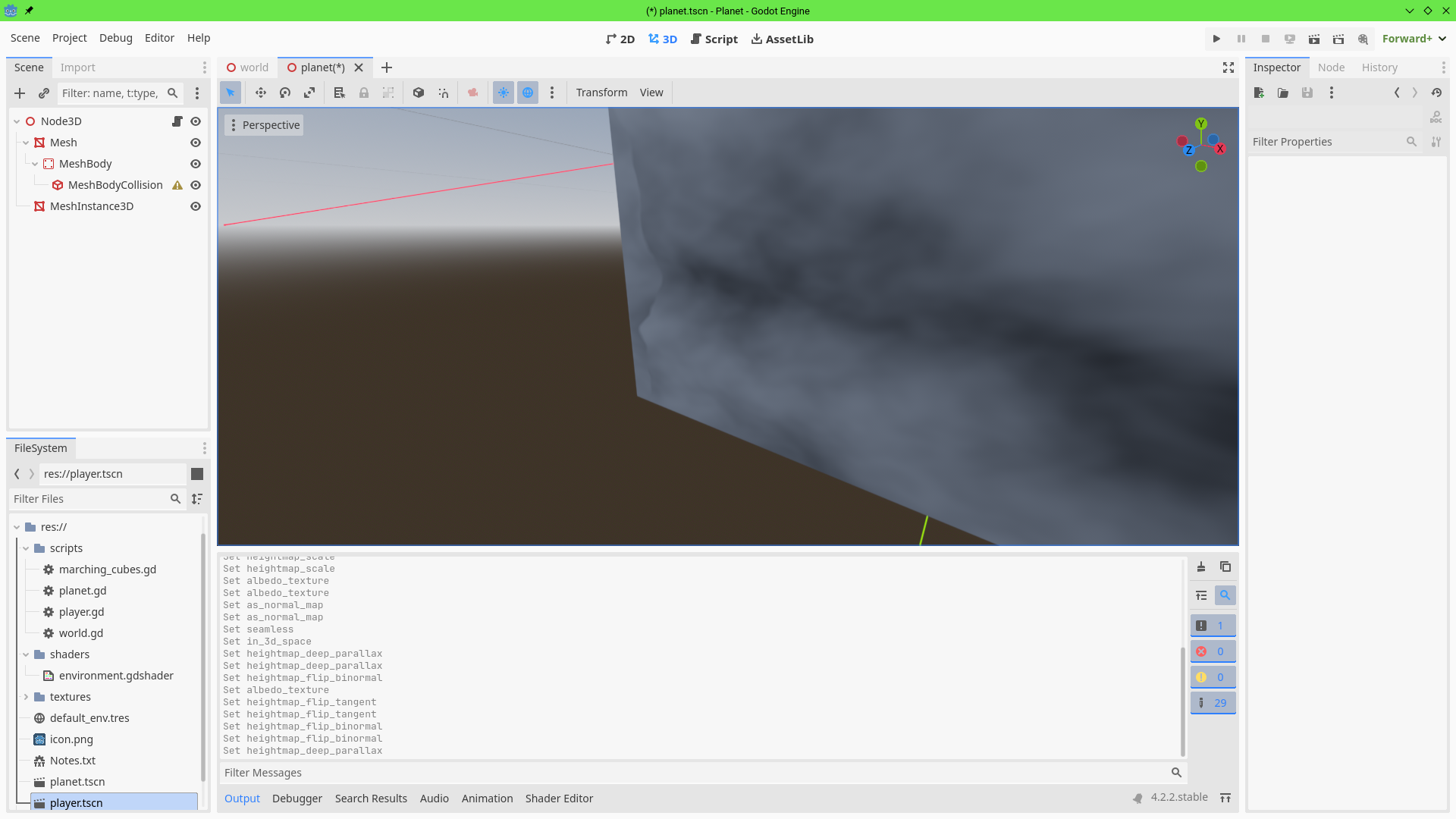

As can be seen in the images, by far the biggest problem is that parallax can't pop out of the surface it's on: if you look at the edge of the cube you can still see a straight line. This isn't limited to edges where the skybox is behind the cube; even interior edges where two faces meet from the camera's perspective can reveal the artificial line. We want high pixels to actually be rendered above the geometry surface and/or low pixels to be rendered below it.

The problem is I'm not sure whether OpenGL or Vulkan shaders allow any way to do this. We're asking a pixel that doesn't initially overlap a triangle to behave as if it touched that triangle, yet a shader is only computed for pixels that touch its geometry. At first this seems solvable by growing or shrinking the geometry in the vertex shader: if the faces are expanded to encompass the highest point on the parallax map, the lower points are then enclosed within the surface. But what do you do when you detect a hole below an edge through which a pixel should pass? The logical answer seems to be using the alpha channel to mark that pixel as transparent so it can see through the surface... but alpha is likely calculated before shaders and probably can't be edited retroactively. Every triangle would need to be rendered in a separate pass so that, if we discard a pixel, it can proceed to check a surface with a lower Z index than itself, which would likely butcher both the renderer and the GPU. The only solution seems to be a mixture of a material shader and a post-process shader: check every triangle the pixel could touch, apply the shrinkage / inflation of every pixel in its heightmap, and if there's a hit, artificially offset the pixel so it acts as if it's at a different position on the screen relative to that triangle.
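As a rough illustration of the "inflate the face, then march and discard" idea above, here is a CPU-side Python sketch of the per-fragment logic. It assumes the face has already been pushed out along its normal to the heightmap's highest point (height 1.0 in tangent space), that the view ray is already expressed in tangent space, and that UVs span [0, 1] on the face; `march_shell` and `HeightFn` are hypothetical names. Where it returns `None`, a real fragment shader would use `discard` (available in both GLSL and Godot's shading language), which lets whatever is behind the fragment show through, though on its own it does not let the pixel continue into a neighbouring triangle's heightfield.

```python
from typing import Callable, Optional, Tuple

# Hypothetical heightmap sampler: (u, v) -> height in [0, 1], where 1.0 is the
# top of the inflated shell and 0.0 is the original (un-inflated) face.
HeightFn = Callable[[float, float], float]


def march_shell(
    height: HeightFn,
    uv: Tuple[float, float],
    view_dir: Tuple[float, float, float],
    steps: int = 64,
) -> Optional[Tuple[float, float]]:
    """Ray-march a heightfield inside a face inflated to its maximum height.

    Returns the (u, v) where the view ray meets the heightfield, or None when
    the fragment should be discarded because the ray left the face's UV range
    (or points away from the surface) before hitting anything.
    """
    dx, dy, dz = view_dir
    if dz >= 0.0:
        return None  # ray never descends into the shell
    # Ray parameter per step, chosen so `steps` steps descend from h=1 to h=0.
    t = 1.0 / (-dz * steps)
    for i in range(steps + 1):
        s = i * t
        u = uv[0] + dx * s
        v = uv[1] + dy * s
        h = 1.0 - i / steps  # same as 1.0 + dz * s, without accumulated drift
        if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
            return None  # ray exited through the side of the shell: discard
        if h <= height(u, v):
            return (u, v)  # first sample at or below the heightfield: hit
    return None  # only reachable if height() returns negative values
```

For example, a flat heightmap of 0.5 viewed straight down hits at the starting UV, while a grazing ray that starts near the face's edge returns `None`: that is exactly the case where the cube's straight silhouette line would otherwise show. The fixed step count keeps the sketch simple; production parallax shaders typically also refine the hit between the last two samples.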

I'm curious whether any solution to this conundrum exists: can shaders allow the parallax effect to pop out of the geometry they're applied to? So far not even a parallax shader for UE5 suggests this is possible: its creators managed to achieve a very impressive effect, but it's still bound to the geometry, and you see a perfectly round sphere if you look at the edges. If there were some magic to solve this, we could attain effects that surpass tessellation for far fewer resources... just add a few other necessary effects like self-shadowing, which someone seems to have already done for Godot 3!

. It still requires mesh pre-processing to displace the additional volume, as well as ray marching through it.