How to manipulate actual vertex position in fragment shader

Sorry it took so long; I simply did not find the time to reply.

I don't want to modify the vertices; I want to modify the fragment's actual 3D position, or displace the pixels as @award wrote.

Let me explain once again what I'm basically doing:

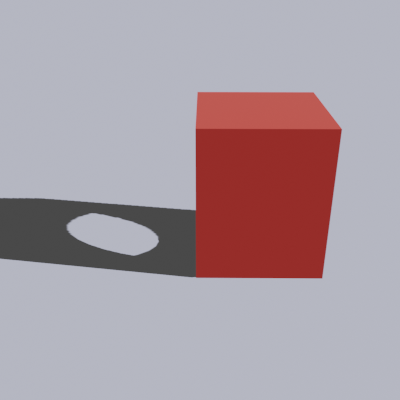

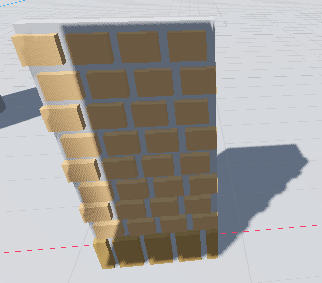

1. Import a 3D texture where each data point is the color of a voxel (or empty, when alpha is zero), like this one.
2. Render a box the size of the voxel model (e.g. 20x40x10) with flipped faces and the raymarching shader applied to it.
3. The vertex shader just transforms the vertices of the box.
4. The fragment shader traces a ray from the camera position toward the fragment's 3D position.
5. If the ray hits a voxel along its path, that hit gives the actual position, depth, color and normal of the pixel.
6. If no voxel is hit, discard the fragment.
7. Use a depth prepass for depth testing.
8. Use the voxel position instead of the vertex position in the shader to calculate shadows, lights, etc.
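To make the per-fragment step concrete, here is a CPU-side sketch of such a raymarch in C++. It assumes a dense grid stored x-fastest and a ray origin and direction already expressed in voxel units, and it uses Amanatides-Woo grid traversal (a 3D DDA). That is one common choice, not necessarily what the RaymarchLoop function later in this thread does; VoxelGrid, Hit and raymarch are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstdint>
#include <limits>
#include <utility>
#include <vector>

using Vec3 = std::array<float, 3>;
using Rgba = std::array<std::uint8_t, 4>;

// Hypothetical dense voxel grid: one RGBA8 color per cell, alpha == 0 means empty.
struct VoxelGrid {
    int nx, ny, nz;
    std::vector<Rgba> cells;  // nx * ny * nz entries, x varies fastest
    const Rgba* at(int x, int y, int z) const {
        if (x < 0 || y < 0 || z < 0 || x >= nx || y >= ny || z >= nz) return nullptr;
        return &cells[x + y * nx + z * nx * ny];
    }
};

struct Hit {
    Vec3 position;             // where the ray enters the hit voxel (voxel units)
    std::array<int, 3> voxel;  // integer cell coordinates of the hit voxel
    Vec3 normal;               // axis-aligned normal of the face that was crossed
    Rgba color;                // the voxel's color
};

// Walks the grid cell by cell (Amanatides-Woo DDA) and stops at the first
// non-empty voxel. Returns false on a miss (the shader would discard).
bool raymarch(const VoxelGrid& g, Vec3 o, Vec3 d, Hit& hit) {
    const float inf = std::numeric_limits<float>::infinity();
    const int dims[3] = {g.nx, g.ny, g.nz};
    if (d[0] == 0.0f && d[1] == 0.0f && d[2] == 0.0f) return false;

    // Slab test: clip the ray to the box [0, dims] and remember the entry axis.
    float t0 = 0.0f, t1 = inf;
    int entryAxis = -1;
    for (int a = 0; a < 3; ++a) {
        if (d[a] == 0.0f) {
            if (o[a] < 0.0f || o[a] > dims[a]) return false;
            continue;
        }
        float ta = -o[a] / d[a], tb = (dims[a] - o[a]) / d[a];
        if (ta > tb) std::swap(ta, tb);
        if (ta > t0) { t0 = ta; entryAxis = a; }
        t1 = std::min(t1, tb);
    }
    if (t0 > t1) return false;

    int cell[3], step[3];
    float tMax[3], tDelta[3];
    Vec3 normal = {0.0f, 0.0f, 0.0f};  // stays zero if the ray starts inside the box
    if (entryAxis >= 0) normal[entryAxis] = d[entryAxis] > 0.0f ? -1.0f : 1.0f;
    for (int a = 0; a < 3; ++a) {
        float p = o[a] + d[a] * t0;
        cell[a] = std::max(0, std::min(static_cast<int>(std::floor(p)), dims[a] - 1));
        step[a] = d[a] > 0.0f ? 1 : (d[a] < 0.0f ? -1 : 0);
        if (step[a] == 0) { tMax[a] = tDelta[a] = inf; continue; }
        float boundary = static_cast<float>(cell[a] + (step[a] > 0 ? 1 : 0));
        tMax[a] = t0 + (boundary - p) / d[a];  // t at which the next cell starts
        tDelta[a] = std::abs(1.0f / d[a]);     // t needed to cross one whole cell
    }

    float t = t0;
    for (;;) {
        const Rgba* c = g.at(cell[0], cell[1], cell[2]);
        if (c && (*c)[3] != 0) {
            hit = Hit{Vec3{o[0] + d[0] * t, o[1] + d[1] * t, o[2] + d[2] * t},
                      {cell[0], cell[1], cell[2]}, normal, *c};
            return true;
        }
        int a = 0;  // axis whose cell boundary is crossed first
        if (tMax[1] < tMax[a]) a = 1;
        if (tMax[2] < tMax[a]) a = 2;
        t = tMax[a];
        cell[a] += step[a];
        if (cell[a] < 0 || cell[a] >= dims[a]) return false;  // left the grid
        tMax[a] += tDelta[a];
        normal = {0.0f, 0.0f, 0.0f};
        normal[a] = -static_cast<float>(step[a]);
    }
}
```

A hit yields the entry point, the normal of the crossed face and the voxel color, i.e. the per-pixel position, normal and color from step 5; a miss corresponds to step 6.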

So I've managed to get everything working except the shadows, because I can't tell the final shader to use the displaced position from my raymarching algorithm.

I've extracted the final shader via RenderDoc and it looks like it should in theory be possible:

void fragment_shader(SceneData scene_data)

{

uint instance_index = instance_index_interp;

vec3 vertex = vertex_interp;

vec3 eye_offset = vec3(0.0);

vec3 view = -normalize(vertex_interp);

vec3 albedo = vec3(1.0);

// ... more variables

// ... source from gdshader file

albedo = m_albedo;

normal = m_normal;

gl_FragDepth = m_depth;

// and if here I could do:

vertex = worldPos;

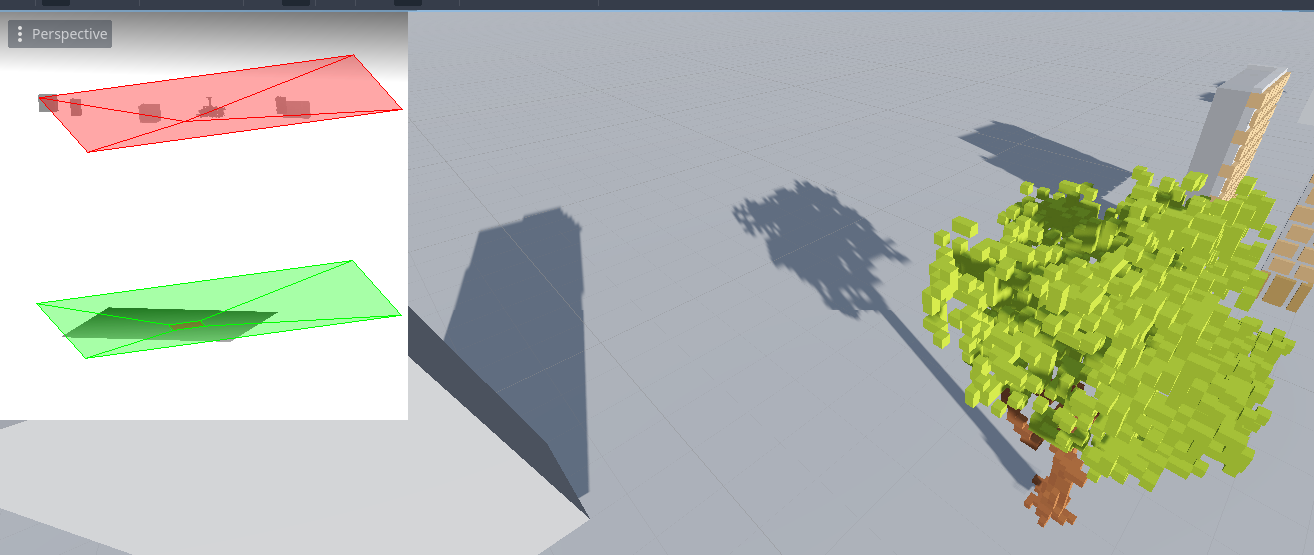

view = -normalize(vertex);But without this, the shadow will be applied to the box just like this:

So I hope this makes it clearer now.

Also, here is a very interesting presentation of how the game Teardown does it:

Because VERTEX is simply replaced with the variable "vertex", as you can see in the extracted shader code: vec3 vertex = vertex_interp;

VERTEX is a placeholder in a gdshader and is replaced with "vertex" when transpiling from Godot's shading language to actual GLSL.

And vertex is a local variable of type vec3, initialized with vertex_interp.

So in my opinion the name is a little misleading; in the fragment shader, something like "position" would be a better name.

However, the name is not important at all. What matters is that my shader needs to modify this variable, but because it's defined as a constant in the gdshader language, you can't write to it without getting a compile (transpile) error, even though it should be possible.

I've already forked the repo and am playing around with it because, judging from "scene_forward_clustered.glsl", it should be fairly straightforward to make it writable from a gdshader.

Excerpt from "scene_forward_clustered.glsl":

    void fragment_shader(in SceneData scene_data) {
        uint instance_index = instance_index_interp;
        //lay out everything, whatever is unused is optimized away anyway
        vec3 vertex = vertex_interp;
        // ...

From "shader_types.h":

    shader_modes[RS::SHADER_SPATIAL].functions["fragment"].built_ins["VERTEX"] = constt(ShaderLanguage::TYPE_VEC3);
    shader_modes[RS::SHADER_SPATIAL].functions["fragment"].built_ins["VIEW"] = constt(ShaderLanguage::TYPE_VEC3);

From "scene_shader_forward_clustered.cpp":

    actions.renames["VERTEX"] = "vertex";
    actions.renames["VIEW"] = "view";

So, in short, "VERTEX" is translated directly to "vertex".
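The effect of those two tables can be modeled in a few lines. This is a toy sketch, not Godot's actual ShaderLanguage or ShaderCompiler code: BuiltIn, kFragmentBuiltIns and translate_assignment are hypothetical, and only the const flag (what constt(...) sets) and the rename to a GLSL identifier are modeled.

```cpp
#include <map>
#include <optional>
#include <string>

// Toy model (not Godot's real API) of the two tables quoted above.
struct BuiltIn {
    std::string glsl_name;  // what actions.renames maps the built-in to
    bool is_const;          // true when registered with constt(...)
};

const std::map<std::string, BuiltIn> kFragmentBuiltIns = {
    {"VERTEX", {"vertex", true}},
    {"VIEW", {"view", true}},
    {"ALBEDO", {"albedo", false}},
};

// Translates "NAME = rhs;" into GLSL, or returns std::nullopt to stand in for
// the compile error you get when assigning to an unknown or constant built-in.
std::optional<std::string> translate_assignment(const std::string& name, const std::string& rhs,
                                                const std::map<std::string, BuiltIn>& built_ins) {
    auto it = built_ins.find(name);
    if (it == built_ins.end() || it->second.is_const) return std::nullopt;
    return it->second.glsl_name + " = " + rhs + ";";
}
```

Clearing the const flag on VERTEX is the toy equivalent of dropping constt(...): the write then compiles straight to an assignment of the local vertex variable.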

It's just a misleadingly named variable, and it should not be marked as const, so that fragment shaders can actually override the interpolated fragment position to achieve certain effects.

And I think I've answered my own question now: having looked at the code, I simply do not see any way of achieving this except forking the project and either using my customized version or creating a PR to Godot and hopefully getting it approved.

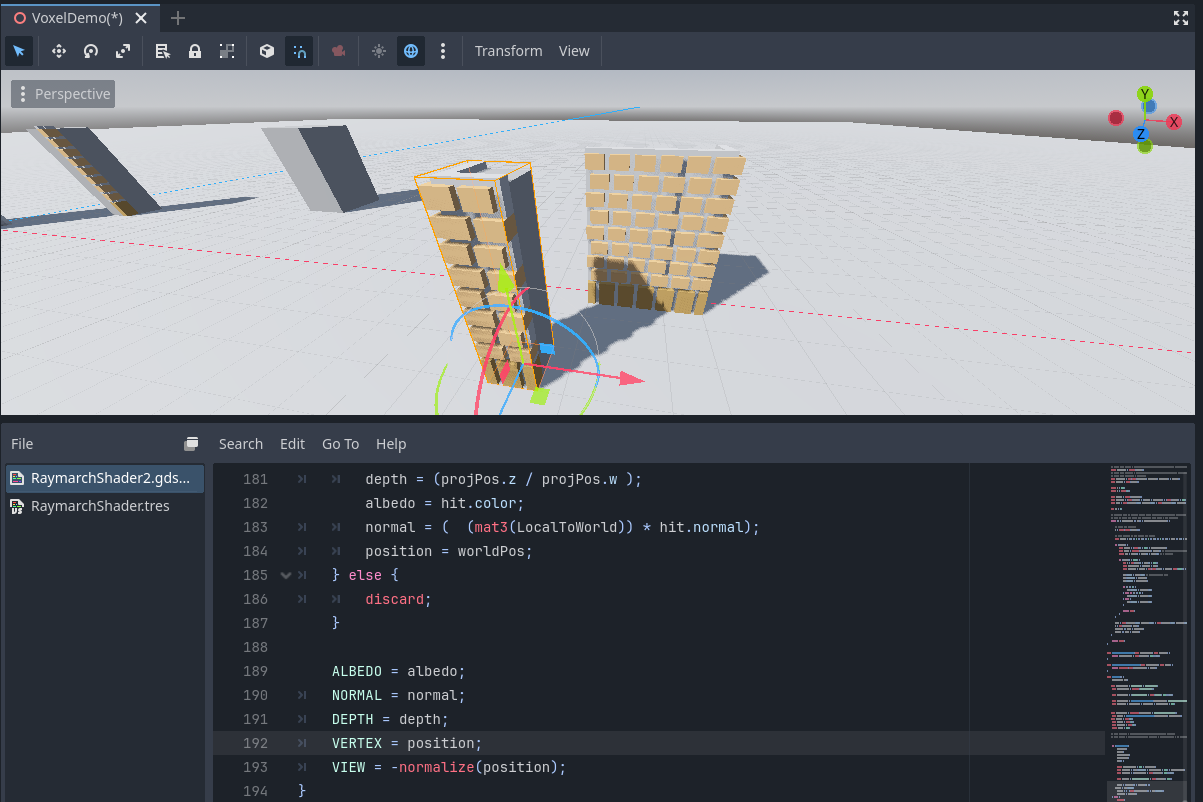

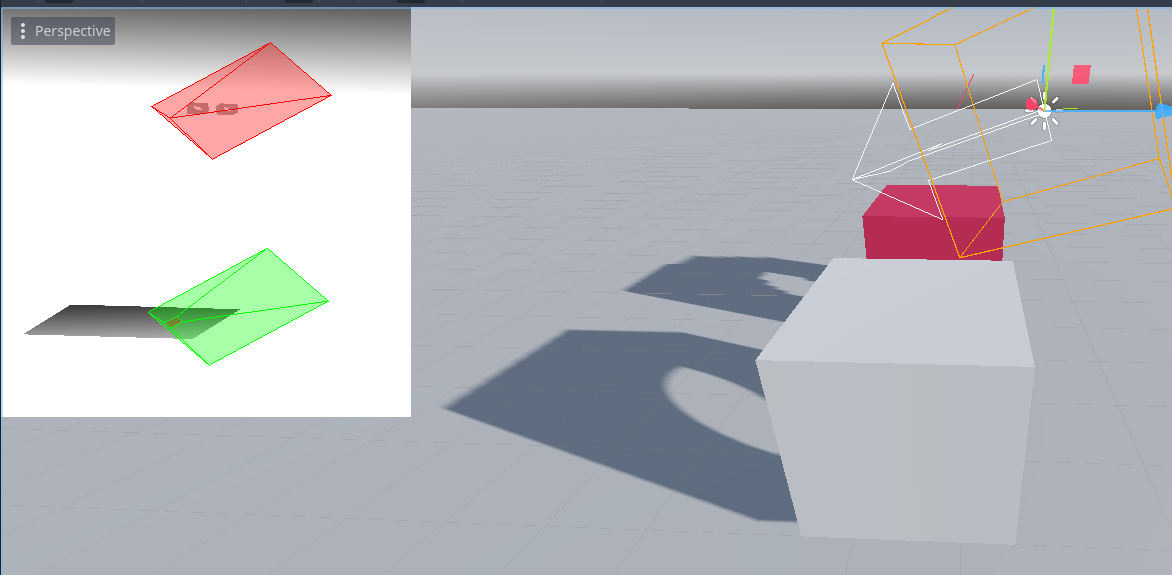

Here is a version with "constt(...)" removed from those two lines, which shows correct shadows:

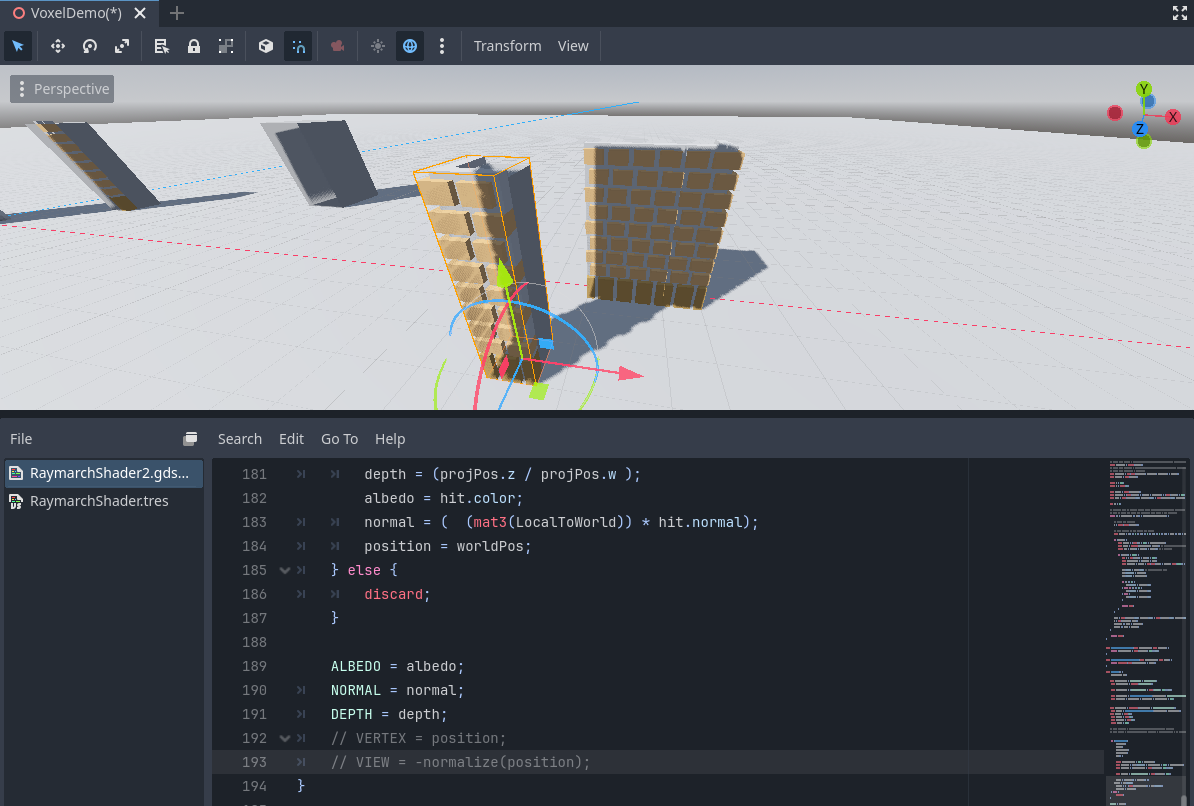

And this one is without setting VERTEX and VIEW:


lunatix I think you're misunderstanding how VERTEX works in Godot's shading language. Although it has the same name, it's not the same thing in the vertex() function and in the fragment() function.

In vertex() it can be read from and written to. In fragment() you can only read it. The initial value you get in vertex() is the vertex position in object space. You can choose to alter it or not.

Between the execution of your vertex() and fragment() functions, Godot will transform it from object space to camera space. It will also interpolate this value for each pixel, between the three vertices that make up the currently rasterized triangle. It makes no sense to modify this value in fragment(). It's the result of interpolating values that the fragment shader can't even read, let alone alter.

You also can't affect the position of a pixel. Shaders simply don't operate this way. The pixel is always fixed, and the fragment() function is executed in isolation for each fixed pixel, with no knowledge whatsoever of any other pixel.

In pre-GLSL 1.5 terminology, VERTEX is a varying; in modern terminology it's an out variable in the vertex shader and an in variable in the fragment shader. And the name is completely appropriate.
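A small sketch of the interpolation described above, taking screen-space triangle positions and per-vertex camera-space positions as inputs. interpolate and edge are hypothetical helpers, and the weights are plain affine barycentrics; real rasterizers apply perspective correction on top, which is omitted here.

```cpp
#include <array>

using Vec2 = std::array<float, 2>;
using Vec3 = std::array<float, 3>;

// Twice the signed area of triangle abc (2D cross product of b - a and c - a).
float edge(const Vec2& a, const Vec2& b, const Vec2& c) {
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0]);
}

// Value of a per-vertex output (e.g. camera-space VERTEX) at pixel p, blended
// from the three vertices with barycentric weights of their screen positions.
Vec3 interpolate(const Vec2& s0, const Vec2& s1, const Vec2& s2,
                 const Vec3& v0, const Vec3& v1, const Vec3& v2, const Vec2& p) {
    float area = edge(s0, s1, s2);
    float w0 = edge(s1, s2, p) / area;  // 1 at s0, 0 on the opposite edge
    float w1 = edge(s2, s0, p) / area;  // 1 at s1
    float w2 = 1.0f - w0 - w1;          // 1 at s2
    Vec3 out{};
    for (int i = 0; i < 3; ++i) out[i] = w0 * v0[i] + w1 * v1[i] + w2 * v2[i];
    return out;
}
```

Each pixel's value is a fixed blend of the three vertex outputs, which is why fragment() only ever sees the result.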

No, I don't misunderstand how this works or how a vertex is interpolated from the vertex to the fragment shader, but it's still a good explanation.

But I finally understand what you're actually trying to tell me, and I think we are talking past each other, so let me clarify some things:

I know that I can't alter the value of a varying sent (and interpolated) from the vertex to the fragment shader.

I also know that you can't modify the position of a fragment.

But I can interpret a specific fragment however I like, for example by manipulating its color, lighting, shadow, etc.

And that's exactly what I want to achieve: I want to tell the code executed after my custom shader which position it should use for the shadow calculations.

I don't want to modify the vertex varying; I'm just looking for a way to tell the shader what my raymarching algorithm calculated.

So what I basically want is a POSITION parameter which can be read and written to, like this:

    // Code before custom shader function
    vec3 vertex = vertex_interp;
    vec3 view = -normalize(vertex);
    #ifdef POSITION_USED
    vec3 position = vertex_interp;
    #endif

    // custom shader function
    vec3 displacedPos = raymarch(...);
    POSITION = displacedPos;

    // Code after custom shader:
    #ifdef POSITION_USED
    view = -normalize(position);
    #endif

    // shadow calculation
    float shadow = 1.0;
    if (directional_lights.data[i].shadow_opacity > 0.001) {
        float depth_z = -position.z; // <-- use position here instead of vertex
        vec3 light_dir = directional_lights.data[i].direction;
        // this also looks fishy: the fragment shader can write to NORMAL, but normal_interp is used here
        vec3 base_normal_bias = normalize(normal_interp) * (1.0 - max(0.0, dot(light_dir, -normalize(normal_interp))));
        // ...
    }

I hope I've made it crystal clear now what I want to achieve and what my actual problem is.

I'm not good at explaining things in an academic way; I'm better at showing what I want to do with code and images, so that may be why my intentions were hard to understand.

P.S.: VERTEX is still replaced directly with vertex, so what I'm actually writing to after transpiling is the vec3 vertex variable, not the vertex_interp varying coming from the vertex stage.


lunatix I want to tell the code being executed after my custom shader what position it should use for the shadow calculations.

You can't tell it that. Shadow mapping doesn't work like that. It doesn't use the pixels rendered by your fragment shader at all. Any shadows that your voxels cast on other voxels in your volume are the responsibility of your raymarcher. And any shadows they cast onto other objects can only be calculated properly if the shadow map renderer runs the raymarcher again (from the light's viewpoint) when rendering the shadow depth texture, and writes the raymarch results into it. This depth is what needs to be displaced, not the pixels' world positions in your fragment shader. Those can't affect the shadow map. The engine could not use this information for anything further down the pipeline. That's why it doesn't exist as an output of the fragment function.
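A minimal sketch of the depth comparison at the heart of shadow mapping, using hypothetical names (ShadowMap, shadow_factor) and a single texel lookup with no filtering. The fragment only supplies where to look (u, v) and its own depth from the light; the stored depths come from the separate shadow pass, so nothing written in the camera pass changes them.

```cpp
#include <vector>

// Depths of the closest occluders as seen from the light, filled by the shadow pass.
struct ShadowMap {
    int width, height;
    std::vector<float> depth;  // light-space depth in [0, 1], 1 = nothing rendered
    float at(int x, int y) const { return depth[y * width + x]; }
};

// Returns 1 if lit, 0 if shadowed. (u, v) and fragDepth are derived from the
// fragment's position; bias hides self-shadowing ("shadow acne").
float shadow_factor(const ShadowMap& map, int u, int v, float fragDepth, float bias) {
    return fragDepth - bias <= map.at(u, v) ? 1.0f : 0.0f;
}
```

If the shadow pass rendered only the box surface, map.depth holds the box, and no position fed in from the camera pass can recover what the light should have seen.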

I think we're still not on the same page, but that's okay. I won't discuss this any further because I've already solved it on my own with my custom fork.

This fork just makes it possible to override the "vertex" variable and the geometric normal from the custom shader code, and makes sure that, if they are used, some calculations are repeated afterwards so that the view vector (including the multiview-related values) stays consistent.

And just to prove it, here is a screenshot and code snippet on how to use it:

    void fragment() {
        RaymarchHit hit;
        // Note: despite the name, this is object -> view space (VIEW_MATRIX * MODEL_MATRIX),
        // so "worldPos" below is a view-space position, which is the space VERTEX
        // and PROJECTION_MATRIX use in fragment().
        mat4 LocalToWorld = VIEW_MATRIX * MODEL_MATRIX;
        mat4 WorldToLocal = inverse(MODEL_MATRIX);
        vec3 cameraPosLS = TransformWorldToObject(WorldToLocal, CAMERA_POSITION_WORLD);
        vec3 rayOrigin = cameraPosLS * VoxelScale + VoxelBoxSize * 0.5;
        vec3 vertexPosWS = (INV_VIEW_MATRIX * vec4(VERTEX, 1.0)).xyz;
        vec3 cameraDirWS = normalize(vertexPosWS - CAMERA_POSITION_WORLD);
        vec3 rayDir = TransformWorldToObjectNormal(WorldToLocal, cameraDirWS);

        if (!RaymarchLoop(rayOrigin, rayDir, VoxelBoxSize, DataTexture, hit)) {
            discard;
        }

        float invVoxelScale = 1.0 / VoxelScale;
        vec3 localPos = (hit.position - VoxelBoxSize * 0.5) * invVoxelScale;
        vec3 worldPos = (LocalToWorld * vec4(localPos, 1.0)).xyz;
        vec4 projPos = PROJECTION_MATRIX * vec4(worldPos, 1.0);

        ALBEDO = hit.color;
        NORMAL = ((mat3(LocalToWorld)) * hit.normal);
        DEPTH = (projPos.z / projPos.w);
        VERTEX_OUT = worldPos; // this one is new
        NORMAL_GEOM = normalize(hit.normal); // this one is new
    }

So this shader is now able to fake 3D voxel geometry by just rendering an inverse box and raymarching against a 3D texture.

It outputs a custom depth to gl_FragDepth and overrides values in the fragment stage to make shadow mapping work (because the shadow code uses the z coordinate of the vertex variable).

And if you still don't believe me, here is a PR in my fork which shows the modifications I had to do: https://github.com/Lunatix89/godot/pull/1/files
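The DEPTH line in the snippet projects the raymarched hit and keeps the NDC z. Note that LocalToWorld there is VIEW_MATRIX * MODEL_MATRIX, so worldPos is actually a view-space point, which is what PROJECTION_MATRIX expects. Below is a C++ sketch of that projection, assuming a right-handed view space looking down -Z and a conventional (not reversed) [0, 1] depth range; Godot's own projection conventions may differ between versions, and perspective, mul and depth_from_view_pos are hypothetical helpers.

```cpp
#include <array>
#include <cmath>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;  // row-major: m[row][col]

// Perspective projection mapping view-space z in [-zNear, -zFar] to NDC depth
// [0, 1] (assumed convention; reversed-Z setups invert this range).
Mat4 perspective(float fovY, float aspect, float zNear, float zFar) {
    float f = 1.0f / std::tan(fovY * 0.5f);
    Mat4 m{};  // all zeros
    m[0][0] = f / aspect;
    m[1][1] = f;
    m[2][2] = zFar / (zNear - zFar);
    m[2][3] = zNear * zFar / (zNear - zFar);
    m[3][2] = -1.0f;  // w_clip = -z_view
    return m;
}

Vec4 mul(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j) r[i] += m[i][j] * v[j];
    return r;
}

// Counterpart of DEPTH = projPos.z / projPos.w for a view-space hit point.
float depth_from_view_pos(const Mat4& proj, float x, float y, float z) {
    Vec4 clip = mul(proj, {x, y, z, 1.0f});
    return clip[2] / clip[3];
}
```

Points on the near plane map to 0, points on the far plane to 1, and depth grows monotonically in between, which is what lets the raymarched surface depth-test correctly against ordinary geometry.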

Note that while you can't modify the (x,y) position of a fragment, you can modify its depth value.

out float DEPTH

Custom depth value (0..1). If DEPTH is being written to in any shader branch, then you are responsible for setting the DEPTH for all other branches. Otherwise, the graphics API will leave them uninitialized.

https://docs.godotengine.org/en/stable/tutorials/shaders/shader_reference/spatial_shader.html#doc-spatial-shader

(Search for 'depth')


lunatix So this shader is now able to fake 3D voxel geometry by just rendering an inverse box and raymarching against a 3D texture.

It outputs a custom depth to gl_FragDepth and manipulates parameters in the fragment stage to make shadow mapping work (because it uses z coordinate of the vertex parameter).

I doubt you can get correct-looking shadows without rendering the volume into the shadow map. That might be the intervention worth a fork.

I'd like to see your solution in motion, with a rotating volume, a changing directional light direction or a couple of moving omni lights, and a 3D texture that has some see-through holes.

I'll take a closer look at this in the next days and will post an update.

I had the same shader in Unity and it worked pretty well; however, I always used the deferred rendering path, and forward only for transparents.

Have you looked into parallax occlusion mapping?


lunatix I had the same shader in Unity and it worked pretty well, however, I always used the deferred rendering path and forward only for transparents.

The rendering path wouldn't make much difference. For the volume's shadow to be cast correctly, the volume needs to be rendered into the shadow map by a raymarching shader.

If you can tell Unity's pipeline to use your custom raymarching shader when rendering the object into shadow map, then it may be possible to have correct shadows. Afaik, in Godot you can't use custom shadow map shaders.

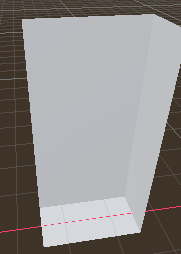

So no amount of post-rasterization manipulation of pixel world positions could do this. There's simply not enough information about the volume there. You might get almost-OK-looking shadow shapes for voxel distributions that are close to a completely filled box. This is precisely what you've shown in your last screenshot. But even that shadow doesn't look correct. Compare it to regular boxes in the same positions.

Here's a thought experiment. You can even try it with your Unity shader to see if it indeed does things correctly, which I'm doubtful of.

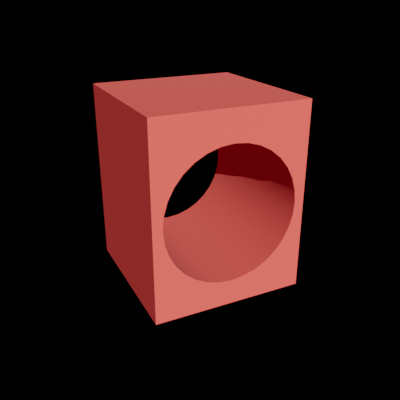

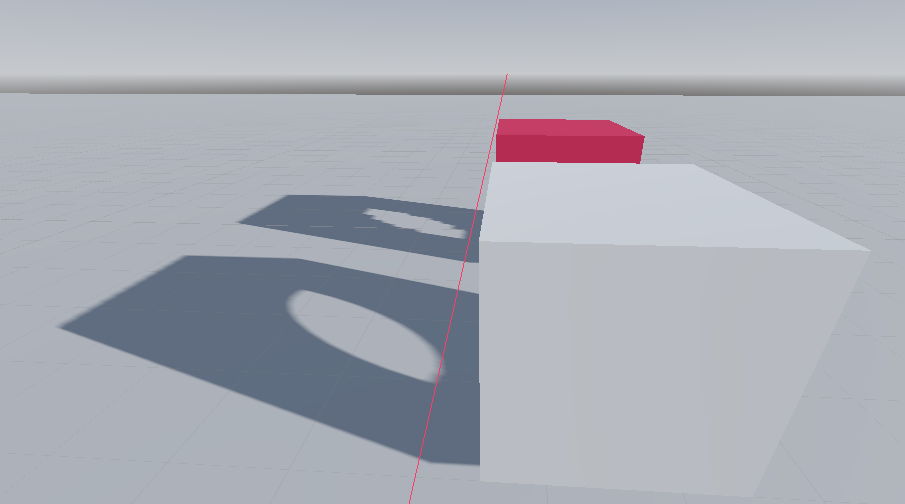

Take a 3D texture that is full block with a hole going all the way through from one side to another. Like this:

Position the camera so it doesn't see the hole. It will render something like this:

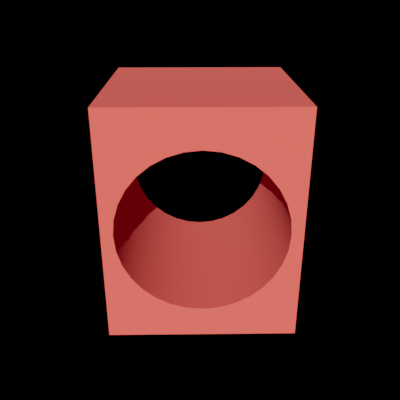

Now shine a directional light sideways in the direction of the hole. The light "sees" something like this:

The proper shadow would then look something like this:

As you can see, the pixels rendered from the camera's view can't store any information about the hole. That information can only be obtained by looking from the light's point of view. And that's precisely what the shadow map is: a view of the objects from the light's perspective. The renderer needs that information to render shadows properly, and you cannot supply it only via the pixels seen by the camera.
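The thought experiment can be reproduced with a tiny voxel grid. This is a hypothetical setup: an N^3 solid block with a tunnel along x, and two axis-aligned orthographic "renders" that record the first solid cell along the view direction. The camera image (looking down z) comes out completely filled, while only the light's image (looking down x) shows the tunnel as a miss.

```cpp
#include <vector>

constexpr int N = 5;

// Solid block with a tunnel running along the whole x axis at (y, z) = (N/2, N/2).
bool solid(int x, int y, int z) {
    bool inside = x >= 0 && x < N && y >= 0 && y < N && z >= 0 && z < N;
    bool tunnel = (y == N / 2 && z == N / 2);
    return inside && !tunnel;
}

// Camera looking down +z: first solid z per (x, y), or -1 if the ray passes through.
std::vector<int> render_along_z() {
    std::vector<int> img(N * N, -1);
    for (int y = 0; y < N; ++y)
        for (int x = 0; x < N; ++x)
            for (int z = 0; z < N; ++z)
                if (solid(x, y, z)) { img[y * N + x] = z; break; }
    return img;
}

// Light looking down +x: first solid x per (y, z), or -1 if the light gets through.
std::vector<int> render_along_x() {
    std::vector<int> img(N * N, -1);
    for (int z = 0; z < N; ++z)
        for (int y = 0; y < N; ++y)
            for (int x = 0; x < N; ++x)
                if (solid(x, y, z)) { img[z * N + y] = x; break; }
    return img;
}
```

Every camera pixel reports depth 0, so nothing in the camera's image hints at the tunnel; the light's image has exactly one miss, and that texel is what lets light through in the correct shadow.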


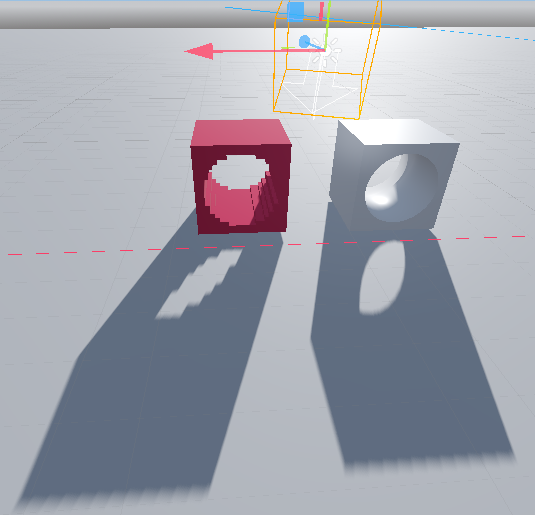

I've reproduced your experiment and it looks like everything is working fine because the shadow map actually uses my custom shader for rendering.

And here is a more complex geometry.

The only tricky part is the bias, because self-shadowing doesn't look so nice with voxel geometry.
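For reference, this is the normal-offset term from the scene_forward_clustered.glsl excerpt quoted earlier in the thread, transcribed to C++. The thread doesn't say which way Godot's light direction vector points or how the result is scaled afterwards, so the sketch only reproduces the arithmetic; offset_position and its scale parameter are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

using Vec3 = std::array<float, 3>;

float dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

Vec3 normalize(const Vec3& v) {
    float len = std::sqrt(dot(v, v));
    return {v[0] / len, v[1] / len, v[2] / len};
}

// base_normal_bias = normalize(n) * (1.0 - max(0.0, dot(light_dir, -normalize(n))))
// The factor is 1 whenever dot(light_dir, n) >= 0 and drops to 0 as light_dir
// approaches -n.
Vec3 base_normal_bias(const Vec3& normal, const Vec3& light_dir) {
    Vec3 n = normalize(normal);
    float k = 1.0f - std::max(0.0f, -dot(light_dir, n));
    return {n[0] * k, n[1] * k, n[2] * k};
}

// Hypothetical use: push the lookup position along the normal before the shadow test.
Vec3 offset_position(const Vec3& pos, const Vec3& normal, const Vec3& light_dir, float scale) {
    Vec3 b = base_normal_bias(normal, light_dir);
    return {pos[0] + b[0] * scale, pos[1] + b[1] * scale, pos[2] + b[2] * scale};
}
```

Because each voxel face has an exact axis-aligned normal, this offset is applied per face, which may be one reason the bias behaves differently on voxel geometry than on smooth meshes.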


lunatix Great! Brownie points for Godot. And for you as well.

I'm just curious why you really needed that fork. It's enough for the raymarch shader to write the volumetric pixel depth into the z-buffer by simply assigning to the built-in DEPTH. If such a shader is invoked for both the camera and the shadow map, then no other intervention is needed.

Thanks a lot

The fork was needed because I could not tell the code executed after my raymarching shader to use the raymarched position instead of the one passed from vertex to fragment shader.

There are several places that use the fragment position to calculate things like fog, lighting, shadows and so on, and all of those would use the interpolated vertex position of the cube I use as the volume to raymarch.

That results in wrong lighting and shadowing, because the z coordinate of the vertex is used to determine which shadow cascade the fragment lies in, and it's also used to compute the PSSM coordinates.

So that's why it looks wrong in this screenshot where I could not provide the raymarched position:

You can clearly see that the actual box was used to determine which fragments are in shadow (wrong angle, but I think you get the point).

In Unity this was easy, because it provides a function that custom shaders can call to compute the fragment's color, lighting, shadows, metallic and so on, passing in the position, albedo, normal, etc. Godot is not that flexible here.

However, I don't know how much value this would bring to the general user, so I'm not sure whether it would be worth opening a PR against the original Godot engine.
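A simplified sketch of why the interpolated box position can pick the wrong cascade: with PSSM, the view-space depth (depth_z = -vertex.z in the quoted shader code) is compared against per-cascade split distances. The split values and select_cascade are hypothetical, and Godot's real selection code may differ in detail.

```cpp
#include <array>

// Hypothetical PSSM split distances: view-space distance at which each cascade ends.
constexpr std::array<float, 4> kSplits = {10.0f, 30.0f, 80.0f, 200.0f};

// Picks a cascade from the view-space z of the shaded point (camera looks down -Z).
// Returns -1 beyond the last split, i.e. outside the shadowed range.
int select_cascade(float view_z) {
    float depth_z = -view_z;
    for (int i = 0; i < static_cast<int>(kSplits.size()); ++i)
        if (depth_z < kSplits[i]) return i;
    return -1;
}
```

With the box surface at z = -9 and the raymarched hit behind it at z = -12, the two positions fall into different cascades (0 and 1), so the shadow lookup uses the wrong map and the wrong coordinates.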

But I could imagine that it might also be useful for rendering SDF volumes or raytracing in the fragment shader, like screen space shadows or screen space reflections.
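As an example of the SDF case, sphere tracing produces a per-fragment hit point in the same way the voxel raymarcher does. This sketch uses a single sphere SDF; Vec3, sdf_sphere and sphere_trace are hypothetical, and the hit distance t would be turned into a position and depth exactly like the voxel hit.

```cpp
#include <cmath>
#include <optional>

struct Vec3 { float x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float length(Vec3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

// Signed distance to a sphere of radius r centered at the origin.
float sdf_sphere(Vec3 p, float r) { return length(p) - r; }

// Sphere tracing: step along the (normalized) ray by the SDF value, which is a
// safe distance bound, until the surface is reached. Returns t or nullopt.
std::optional<float> sphere_trace(Vec3 origin, Vec3 dir, float r,
                                  float maxT = 100.0f, int maxSteps = 128) {
    float t = 0.0f;
    for (int i = 0; i < maxSteps && t < maxT; ++i) {
        float d = sdf_sphere(origin + dir * t, r);
        if (d < 1e-4f) return t;
        t += d;
    }
    return std::nullopt;
}
```

The hit point origin + dir * t plays the same role as hit.position in the voxel shader: it is what would need to reach the lighting, fog and shadow code instead of the box's interpolated position.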