have you looked into parallax occlusion mapping?

How to manipulate actual vertex position in fragment shader

lunatix I had the same shader in Unity and it worked pretty well, however, I always used the deferred rendering path and forward only for transparents.

The rendering path wouldn't make much difference. For the volume's shadow to be cast correctly, the volume needs to be rendered into the shadow map by a raymarching shader.

If you can tell Unity's pipeline to use your custom raymarching shader when rendering the object into the shadow map, then it may be possible to have correct shadows. Afaik, in Godot you can't use custom shadow map shaders.

So no amount of post-rasterization manipulation of pixel world positions could do this. There's simply not enough information about the volume there. You might get almost ok looking shadow shapes for voxel distributions that are close to a completely filled box. This is precisely what you've shown in your last screenshot. But even that shadow doesn't look correct. Compare it to just regular boxes in the same positions.

Here's a thought experiment. You can even try it with your Unity shader to see if it indeed does things correctly, which I'm doubtful of.

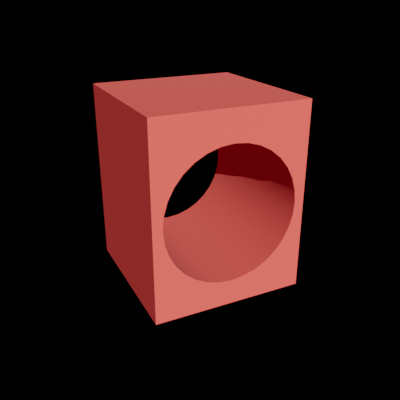

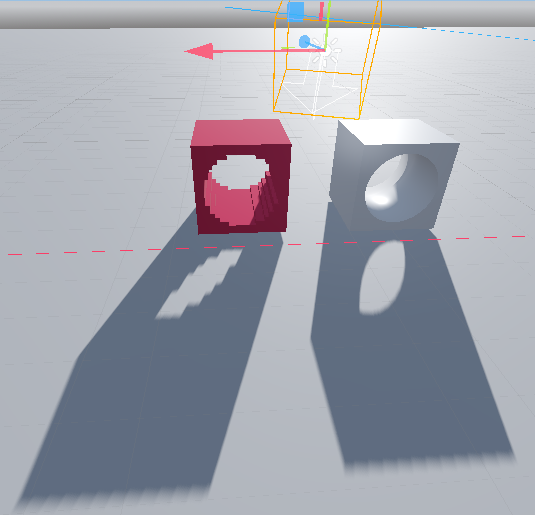

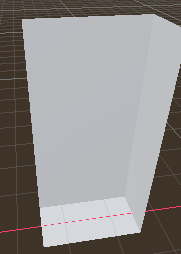

Take a 3D texture that is a full block with a hole going all the way through from one side to the other. Like this:

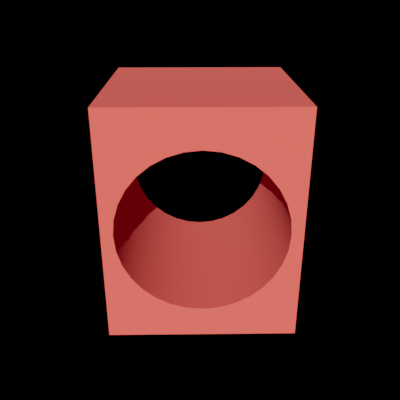

Position the camera so it doesn't see the hole. It will render something like this:

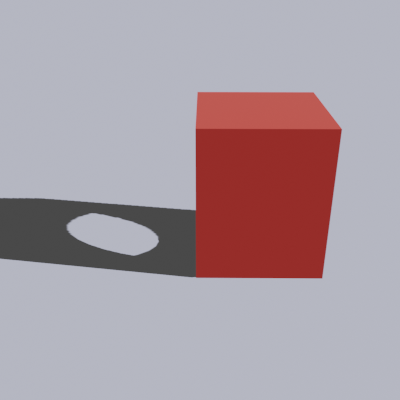

Now shine a directional light sideways in the direction of the hole. The light "sees" something like this:

The proper shadow would then look something like this:

As you can see, the pixels rendered from the camera view can't store any information about the hole. That information can only be obtained by looking from the light's point of view. And that's precisely what the shadow map is: a view of the objects from the light's perspective. The renderer needs that information to properly render shadows, and you cannot supply it only via pixels seen by the camera.
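
To make the thought experiment concrete, here is a minimal sketch in Python (not shader code). It assumes a hypothetical 4x4x4 occupancy grid with a tunnel along the x axis and uses simple orthographic "first solid voxel" marching for both the camera and the light. The grid size, the `solid` function and the axis choices are all made up for illustration.

```python
# Hypothetical 4x4x4 occupancy grid: a solid block with a tunnel along the x axis.
N = 4

def solid(x, y, z):
    # The tunnel runs through every x at (y, z) == (1, 1).
    return not (y == 1 and z == 1)

def depth_map(axis):
    """Orthographic view down +axis: index of the first solid voxel per pixel, or None."""
    result = []
    for u in range(N):
        row = []
        for v in range(N):
            hit = None
            for d in range(N):
                # d steps along the view axis, (u, v) span the other two axes.
                coord = [u, v]
                coord.insert(axis, d)
                if solid(*coord):
                    hit = d
                    break
            row.append(hit)
        result.append(row)
    return result

camera_view = depth_map(axis=2)  # camera looks along z and cannot see the tunnel
light_view = depth_map(axis=0)   # light shines along x, straight through the tunnel
```

In this sketch every entry of `camera_view` is the same first-layer hit, while `light_view` has one pixel with no hit at all. That missing hit is exactly the information a shadow map needs and the camera image does not contain.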

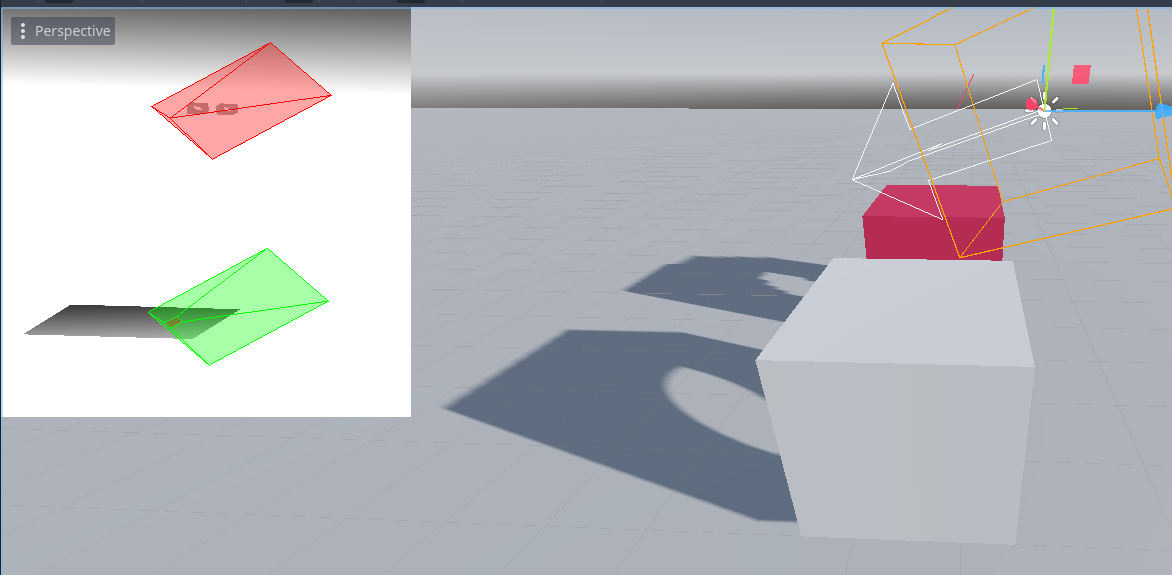

I've reproduced your experiment and it looks like everything is working fine because the shadow map actually uses my custom shader for rendering.

And a more complex geometry.

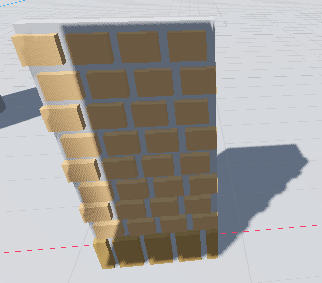

The only tricky part is the bias, because self-shadowing doesn't look so nice with voxel geometry.

lunatix Great! Brownie points for Godot. And you as well

I'm just curious why you needed that fork, really. It's enough for the raymarch shader to write the volumetric pixel depth into the z buffer by simply assigning to the built-in DEPTH. If such a shader is invoked for both the camera and the shadow map, then no other intervention is needed.
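
As a rough illustration of what "writing the volumetric depth" means, the sketch below (Python, not shader code) maps a view-space z to a [0, 1] depth value with a standard OpenGL-style perspective projection. The function name, the near/far values and the two z positions are hypothetical, and the real depth range and direction depend on the engine version and rendering backend, so treat the mapping itself as an assumption.

```python
def depth_from_view_z(view_z, near, far):
    """Map view-space z (negative in front of the camera) to a [0, 1] depth value.

    Assumption: OpenGL-style projection with NDC z in [-1, 1]; an engine may use
    a different range or reversed-z.
    """
    clip_z = -(far + near) / (far - near) * view_z - 2.0 * far * near / (far - near)
    clip_w = -view_z
    ndc_z = clip_z / clip_w
    return ndc_z * 0.5 + 0.5

near, far = 0.05, 100.0
box_face_z = -2.0  # where the rasterized proxy cube surface lies
hit_z = -2.6       # where the ray actually hit a voxel inside the cube

box_depth = depth_from_view_z(box_face_z, near, far)
hit_depth = depth_from_view_z(hit_z, near, far)
```

Under these assumptions `hit_depth` is larger than `box_depth`, so writing it instead of the cube's own depth pushes the pixel back to the voxel surface in the depth buffer.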

Thanks a lot

The fork was needed because I could not tell the code executed after my raymarching shader to use the raymarched position instead of the one passed from vertex to fragment shader.

There are several places which use the fragment position to calculate things like fog, lighting, shadows and so on and all those functions would calculate with the interpolated vertex position of the cube I use as a volume to raymarch.

However, that would end up in wrong lighting and shadowing because the z coordinate from the vertex is used to find out which shadow cascade the fragment lies in, and it's also used to get the PSSM coords.
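
A small Python sketch of why the depth source matters for cascade selection. The split distances and the two depth values are hypothetical (they are not Godot's defaults), and the lookup is a generic "first cascade whose far distance covers the depth" rule, not the engine's actual code.

```python
import bisect

# Hypothetical cascade far distances in view-space units.
CASCADE_SPLITS = [2.5, 10.0, 40.0, 100.0]

def cascade_index(view_depth):
    """Return the index of the first cascade whose far distance covers view_depth."""
    idx = bisect.bisect_left(CASCADE_SPLITS, view_depth)
    # Clamp depths beyond the last split to the last cascade.
    return min(idx, len(CASCADE_SPLITS) - 1)

box_depth = 2.0  # depth of the interpolated cube surface
hit_depth = 3.1  # depth of the raymarched voxel surface

box_cascade = cascade_index(box_depth)
hit_cascade = cascade_index(hit_depth)
```

With these numbers the cube surface falls into cascade 0 while the raymarched hit falls into cascade 1, so shadow lookups based on the cube's depth would sample the wrong cascade.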

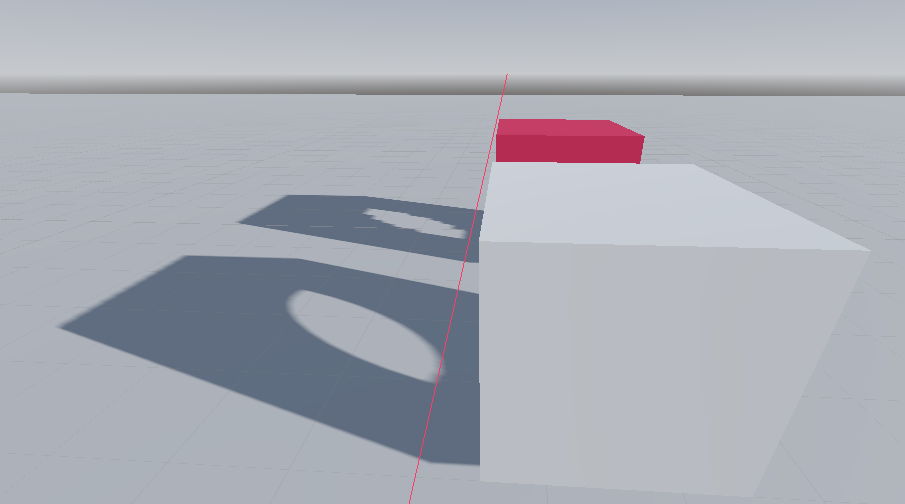

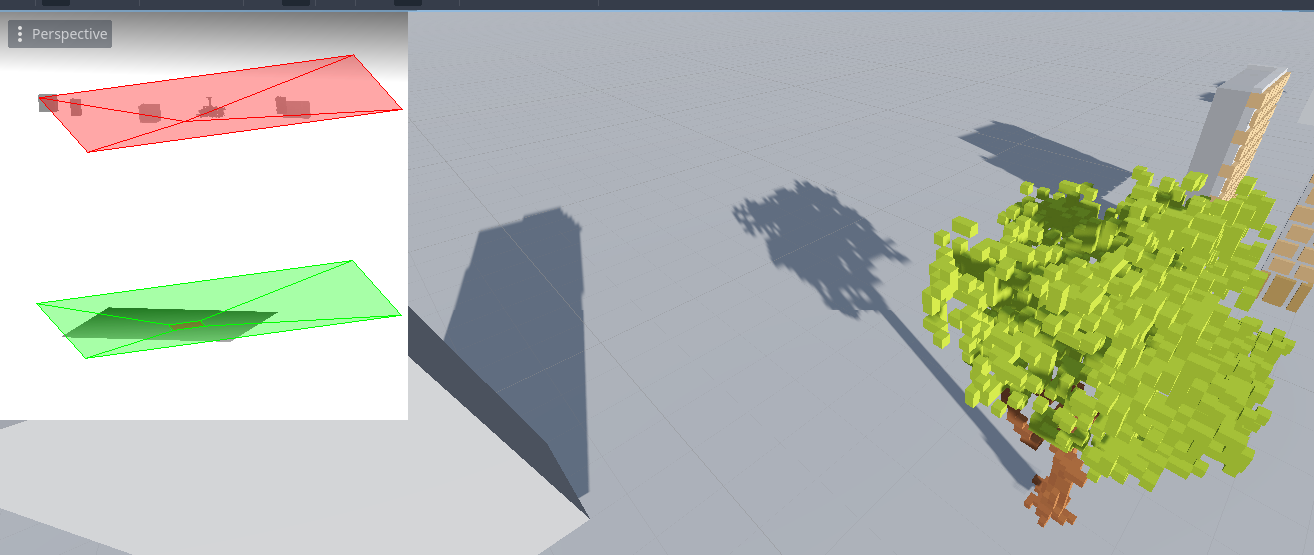

So that's why it looks wrong in this screenshot where I could not provide the raymarched position:

You can see clearly that the actual box was used to calculate which fragment is in shadow (wrong angle but I think you'll get the point).

In Unity this was easy to do because custom shaders can call a provided function that calculates the fragment's color, lighting, shadow, metallic and so on, and you can pass it position, albedo, normal etc. Godot is not that flexible here.

However, I don't know how much value this would bring to the general purpose user, so I'm not sure whether it would be useful to open a PR to the original Godot engine.

But I could imagine that it might also be useful for rendering SDF volumes or raytracing in the fragment shader, like screen space shadows or screen space reflections.

lunatix Oh, I finally grok what you were talking about. Your terminology was confusing. You want to be able to alter the fragment's world position that Godot uses further down the pipeline.

This is indeed useful for any kind of volumetric rendering. In fact it may be essential to make volumetrics play nice with the rest of the scene. You should definitely open a PR or at least a feature request to see how the devs would react and get some discussion going.

VERTEX_OUT is not a good name though. It should be something like FRAGMENT_WORLD

Yes, fragment world position is a good name for it. In Unity they always just use the term "position" in fragment shaders, like "positionWS" (world space), "positionSS" (screen space) or "positionOS" (object space) etc.

And you're right, VERTEX_OUT is bad and I knew that, but I did not have a better term for it yet.

Just found out after opening the PR that there is already another user who wanted to achieve the same: https://github.com/godotengine/godot/pull/65307

This user also wrote a really nice addon which uses the DDA algorithm, but the plugin won't work until the PR has been merged into the main repo.

I think I like this user's solution more because there is actually no need to supply the fragment's world position, since it can be reconstructed from linear depth. I also really like the conclusion that you can't move a fragment to an arbitrary position in 3D space; you can only move it along the ray, i.e. farther from or nearer to the camera.
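
Here is a minimal Python sketch of that reconstruction, assuming linear depth is measured along the camera's forward axis. The function and variable names are hypothetical and this is not the code from the linked PR.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def position_from_linear_depth(cam_pos, cam_forward, ray_dir, linear_depth):
    """Reconstruct the world position on a camera ray from linear depth.

    The distance travelled along the ray is linear_depth divided by the cosine
    of the angle between the ray and the camera's forward axis.
    """
    ray_dir = normalize(ray_dir)
    cam_forward = normalize(cam_forward)
    t = linear_depth / dot(ray_dir, cam_forward)
    return tuple(p + t * d for p, d in zip(cam_pos, ray_dir))

cam_pos = (0.0, 0.0, 0.0)
cam_forward = (0.0, 0.0, -1.0)
ray_dir = (1.0, 0.0, -1.0)  # ray through a pixel to the right of the screen centre

near_hit = position_from_linear_depth(cam_pos, cam_forward, ray_dir, 2.0)
far_hit = position_from_linear_depth(cam_pos, cam_forward, ray_dir, 3.0)
```

Changing only the depth moves the result from roughly (2, 0, -2) to (3, 0, -3): both points lie on the same ray through the pixel, which is the "only along the ray" constraint in action.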

However, it looks like the Godot core devs have not yet come to a solution and the team is currently focusing on the 4.2 release, so I will probably just continue to use a fork and stick to this user's solution, as it is way more optimized than my version was.