
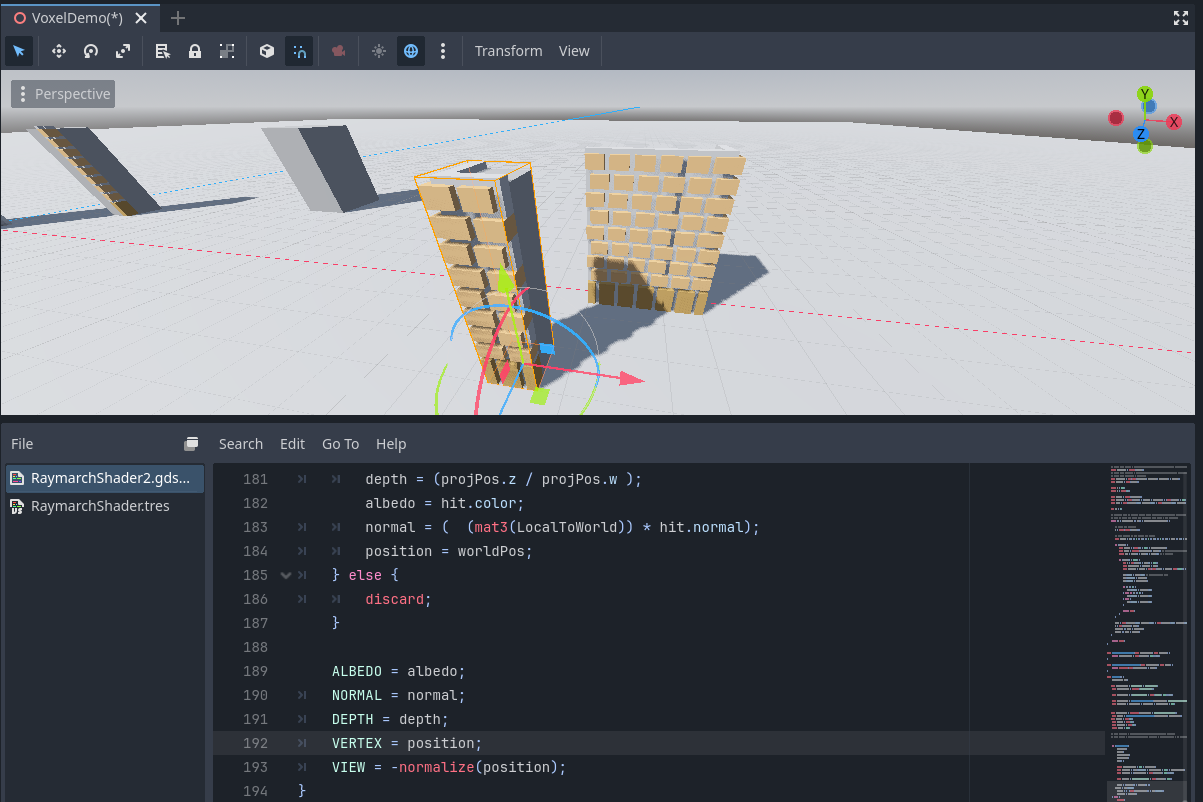

I'm currently working on a voxel raymarching shader that renders an inverted box and reads the voxel data from a 3D texture.

Everything works quite well: I can raymarch against the voxels in local space and get all the outputs I need, such as color, normal, depth and position.

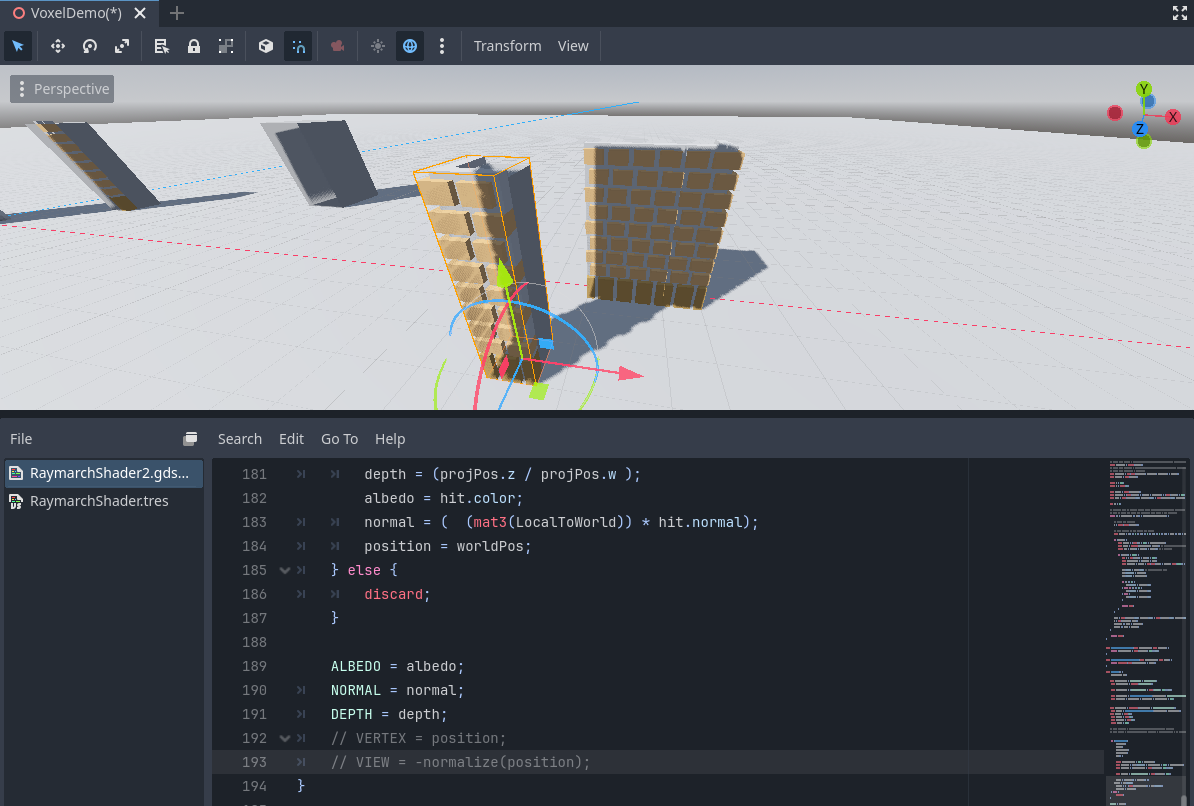

However, in a Godot spatial shader I can't assign the actual world position I've calculated in the fragment shader to the VERTEX built-in, because VERTEX is read-only in fragment() (the editor reports "Constants can not be modified").

I've looked at the compiled shader in RenderDoc, and it seems it should be fairly easy to change the "vertex" variable there, but the shader editor just won't let me do it.

So my question is: is there anything I can do about this?

Do I have to change the actual engine code so VERTEX becomes a parameter rather than a constant?

Some (incomplete) code for reference:

```glsl
void fragment() {
    RaymarchHit hit;

    // Note: VIEW_MATRIX * MODEL_MATRIX maps local space to *view* space, so
    // "worldPos" below is actually a view-space position (the space VERTEX uses).
    mat4 LocalToWorld = VIEW_MATRIX * MODEL_MATRIX;
    mat4 WorldToLocal = inverse(MODEL_MATRIX);

    // In fragment(), VERTEX is the interpolated view-space position of the box surface.
    vec3 vertexPosWS = (INV_VIEW_MATRIX * vec4(VERTEX, 1.0)).xyz;
    vec3 cameraPosLS = TransformWorldToObject(WorldToLocal, CAMERA_POSITION_WORLD);
    vec3 rayOrigin = cameraPosLS * VoxelScale + VoxelBoxSize * 0.5;
    vec3 cameraDirWS = normalize(vertexPosWS - CAMERA_POSITION_WORLD);
    vec3 rayDir = TransformWorldToObjectNormal(WorldToLocal, cameraDirWS);

    // Godot's shading language needs float literals here (vec3(0) is an int constructor).
    vec3 albedo = vec3(0.0);
    vec3 normal = vec3(0.0);
    float depth = 0.0;

    if (RaymarchLoop(rayOrigin, rayDir, VoxelBoxSize, DataTexture, hit)) {
        float invVoxelScale = 1.0 / VoxelScale;
        vec3 localPos = (hit.position - VoxelBoxSize * 0.5) * invVoxelScale;
        vec3 worldPos = (LocalToWorld * vec4(localPos, 1.0)).xyz;
        vec4 projPos = PROJECTION_MATRIX * vec4(worldPos, 1.0);
        depth = projPos.z / projPos.w;
        albedo = hit.color;
        normal = normalize(mat3(LocalToWorld) * hit.normal);

        // Something like this, might be transformed to another space first but you get the point:
        // VERTEX = worldPos;
        // VIEW = -normalize(worldPos);
    } else {
        discard;
    }

    ALBEDO = albedo;
    NORMAL = normal;
    DEPTH = depth;
}
```
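A minimal sketch of one possible workaround, which never writes VERTEX: write the hit's depth to DEPTH, its view-space normal to NORMAL, and, assuming Godot 4.3 or newer, its view-space position to `LIGHT_VERTEX`, which the newer 4.x docs list as a writable copy of VERTEX used only for lighting and shadows. It also assumes the Forward+ or Mobile renderer, where clip-space z/w should already be the 0..1 value DEPTH expects (the Compatibility renderer would likely need a remap). To keep it self-contained, `RaymarchLoop` is swapped for a hypothetical analytic ray/sphere hit; `hit_sphere` and `sphere_radius` are made-up names, not part of the original shader.

```glsl
shader_type spatial;
// Back faces only, like the inverted box: the proxy stays visible with the camera inside it.
render_mode cull_front;

// Hypothetical stand-in for the voxel data: a sphere centred at the object origin.
uniform float sphere_radius = 0.5;

// Hypothetical stand-in for RaymarchLoop: nearest hit of ro + t * rd (rd normalized)
// with the sphere, in object space.
bool hit_sphere(vec3 ro, vec3 rd, out vec3 p) {
    p = vec3(0.0);
    float b = dot(ro, rd);
    float c = dot(ro, ro) - sphere_radius * sphere_radius;
    float h = b * b - c;
    if (h < 0.0) {
        return false; // the ray misses the sphere
    }
    float t = -b - sqrt(h);
    if (t < 0.0) {
        return false; // sphere behind the camera, or camera inside the sphere
    }
    p = ro + t * rd;
    return true;
}

void fragment() {
    mat4 local_to_view = VIEW_MATRIX * MODEL_MATRIX;
    mat4 world_to_local = inverse(MODEL_MATRIX);

    // VERTEX is read-only here: the view-space position on the proxy box surface.
    vec3 surface_ws = (INV_VIEW_MATRIX * vec4(VERTEX, 1.0)).xyz;
    vec3 ro = (world_to_local * vec4(CAMERA_POSITION_WORLD, 1.0)).xyz;
    vec3 rd = normalize((world_to_local * vec4(surface_ws - CAMERA_POSITION_WORLD, 0.0)).xyz);

    vec3 p_ls;
    if (!hit_sphere(ro, rd, p_ls)) {
        discard;
    }

    // Object space -> view space -> clip space.
    vec3 p_vs = (local_to_view * vec4(p_ls, 1.0)).xyz;
    vec4 clip = PROJECTION_MATRIX * vec4(p_vs, 1.0);

    // Assumes Forward+/Mobile: z / w is used directly as the depth value.
    DEPTH = clip.z / clip.w;
    // Assumes Godot 4.3+: moves the point used for lights and shadows, not the fragment.
    LIGHT_VERTEX = p_vs;
    // Object-space sphere normal, transformed to view space (the space NORMAL uses).
    NORMAL = normalize(transpose(inverse(mat3(local_to_view))) * normalize(p_ls));
    ALBEDO = vec3(0.8);
}
```

On a MeshInstance3D with a unit BoxMesh this should draw a lit sphere that depth-tests against other geometry; replacing `hit_sphere` with the real `RaymarchLoop` (plus the `hit.position` to local-space conversion above) would be the next step.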