VERTEX is a placeholder in a gdshader and is replaced with "vertex" when transpiling from Godot's shader language to actual GLSL.

vertex, in turn, is a variable of type vec3 that is assigned vertex_interp by default.

So in my opinion the name is a little misleading; in a fragment shader, something like position would be a better name.

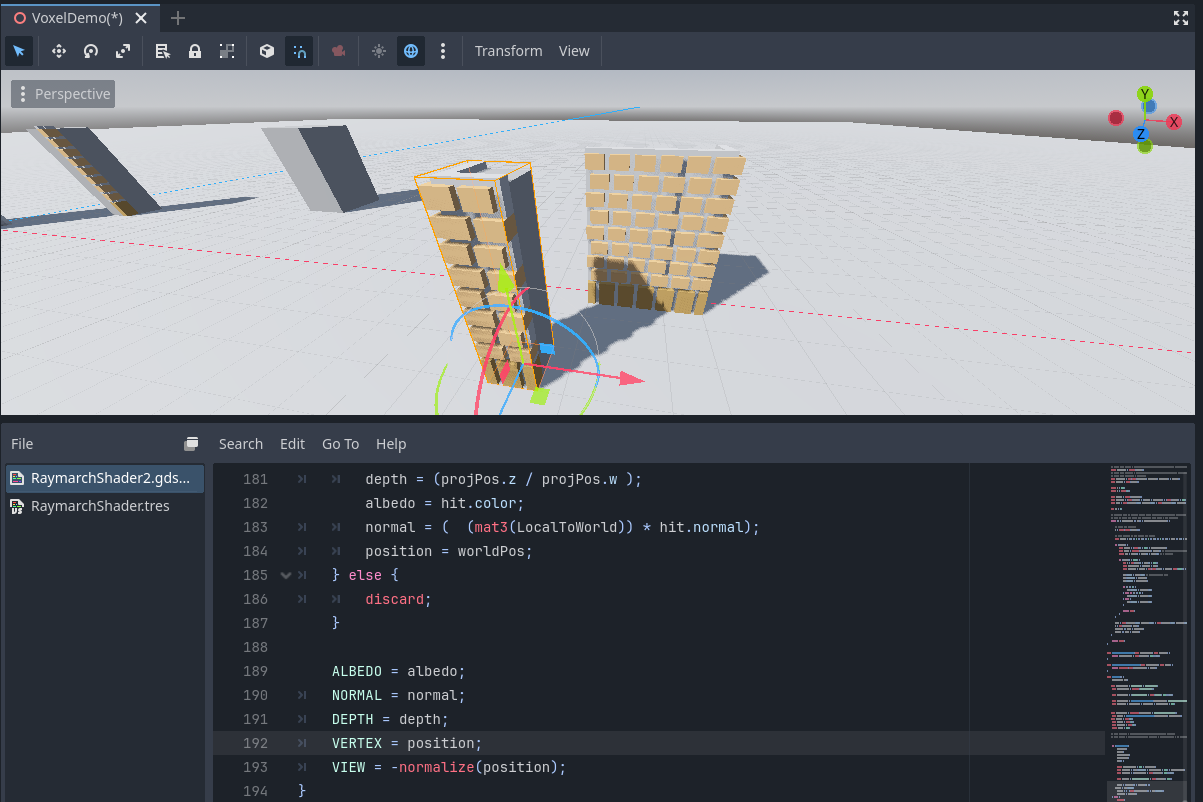

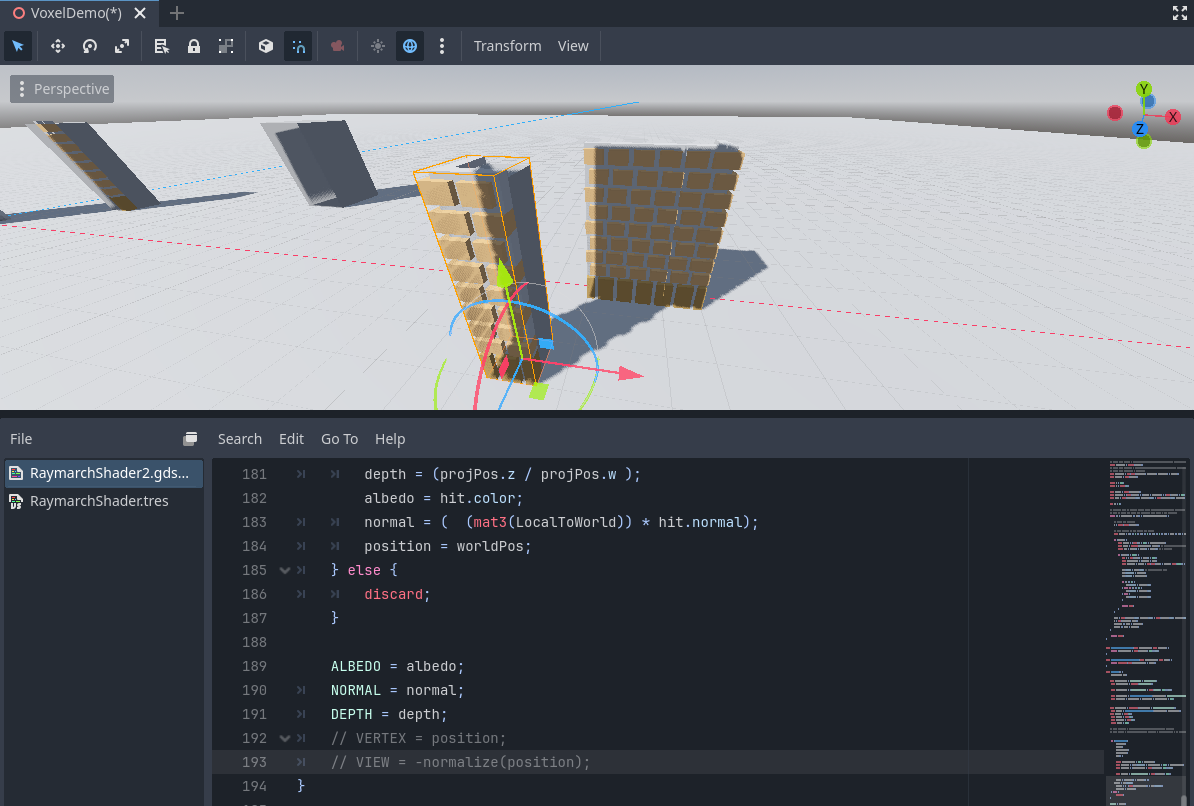

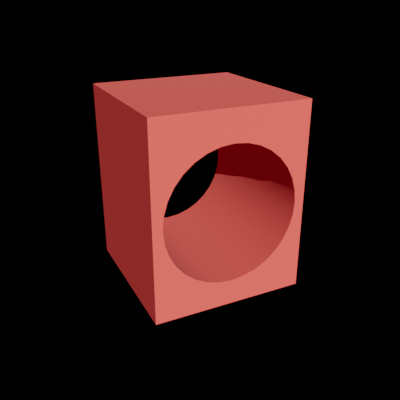

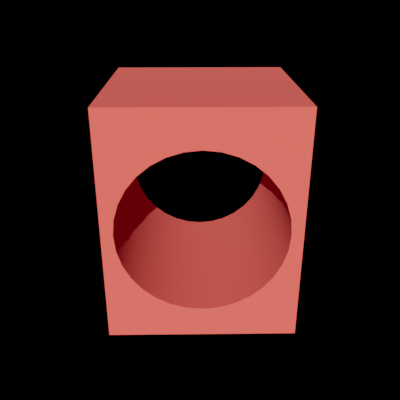

However, the name is not the important part. My shader needs to modify this variable, but because it is declared as a constant in the gdshader language, writing to it causes a compile (transpile) error, even though it should be possible.

I've already forked the repo and am playing around with it because, judging from "scene_forward_clustered.glsl", it should be fairly straightforward to make it overridable in a gdshader.

Excerpt from "scene_forward_clustered.glsl":

void fragment_shader(in SceneData scene_data) {

uint instance_index = instance_index_interp;

//lay out everything, whatever is unused is optimized away anyway

vec3 vertex = vertex_interp;

From "shader_types.h":

shader_modes[RS::SHADER_SPATIAL].functions["fragment"].built_ins["VERTEX"] = constt(ShaderLanguage::TYPE_VEC3);

shader_modes[RS::SHADER_SPATIAL].functions["fragment"].built_ins["VIEW"] = constt(ShaderLanguage::TYPE_VEC3);

From "scene_shader_forward_clustered.cpp":

actions.renames["VERTEX"] = "vertex";

actions.renames["VIEW"] = "view";

So, in short, "VERTEX" translates directly to "vertex".
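To make the interaction between the const flag and the rename table concrete, here is a minimal toy model in C++. It is only a sketch under my own assumptions: `BuiltIn`, `BuiltInTable`, `fragment_builtins` and `translate_assignment` are hypothetical names I made up for illustration and are not Godot's actual classes or functions. It only shows why a write to VERTEX is rejected while the built-in is const, and what the renamed output would look like once the flag is cleared.

```cpp
#include <map>
#include <string>

// Toy model only: hypothetical types, NOT Godot's real data structures.
struct BuiltIn {
    std::string glsl_name; // name emitted into the generated GLSL
    bool is_const;         // true: shader code may read it but not write it
};

using BuiltInTable = std::map<std::string, BuiltIn>;

// Mirrors the situation from the excerpts: in fragment(), VERTEX and VIEW
// are const and are renamed to "vertex" and "view".
BuiltInTable fragment_builtins() {
    return {
        {"VERTEX", {"vertex", true}},
        {"VIEW", {"view", true}},
    };
}

// Translates the statement "NAME = value;" into its GLSL form.
// Returns false (and leaves `out` untouched) if NAME is unknown or const,
// which stands in for the transpile error.
bool translate_assignment(const BuiltInTable &table, const std::string &name,
                          const std::string &value, std::string &out) {
    BuiltInTable::const_iterator it = table.find(name);
    if (it == table.end() || it->second.is_const) {
        return false;
    }
    out = it->second.glsl_name + " = " + value + ";";
    return true;
}
```

With the table as returned by `fragment_builtins()`, translating an assignment to "VERTEX" fails. After setting `is_const` to false for that entry, the same call succeeds and yields an assignment to `vertex`. In this toy model, that flag is the only thing standing between the shader and the write.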

It's just a misleading variable name, and it should be a writable parameter rather than a const, so that fragment shaders can actually manipulate the interpolated fragment position to achieve certain effects.

And I think I've now answered my own question: having looked at the code, I simply do not see any way of achieving this except forking the project and either using my customized version or creating a PR to Godot and hoping it gets approved.