Hey y'all,

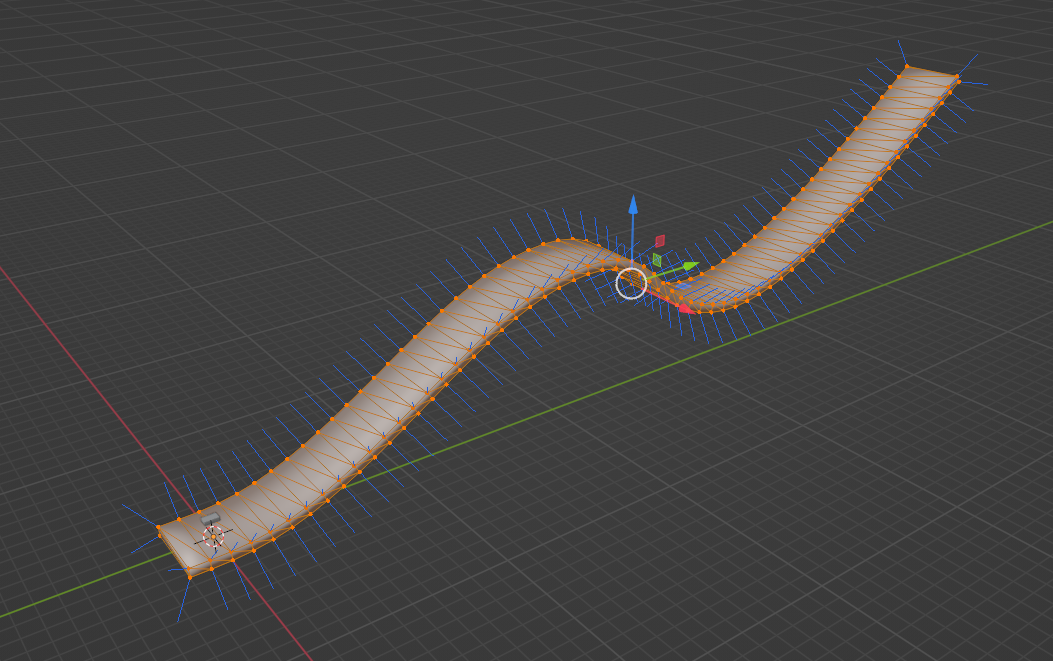

I wanted to experiment with the new barycentric coordinate function coming in 4.2, but I seem to be getting some odd results with meshes imported from Blender. In a new project I'm using the code found in the example of this pull request (#71233), with a small modification so the traveling object is controlled with the arrow keys instead of an AnimationPlayer. This appears to work correctly with the track mesh provided in the example:

To double check my interpretation of the code, I made the same modification to the example project and it also works.

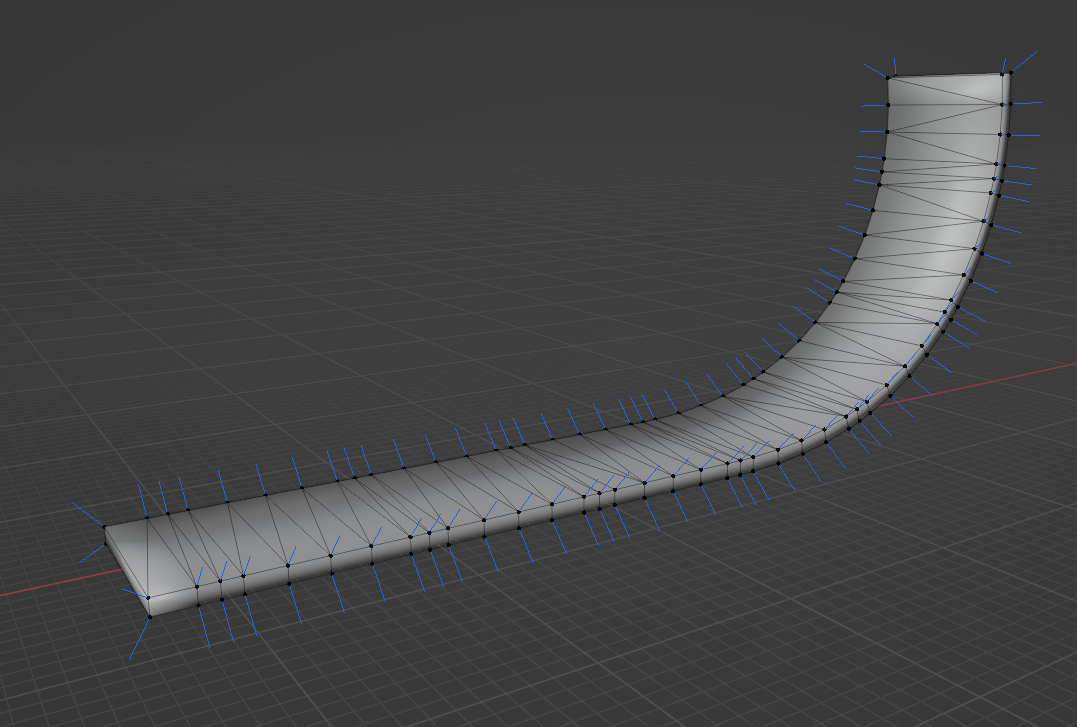

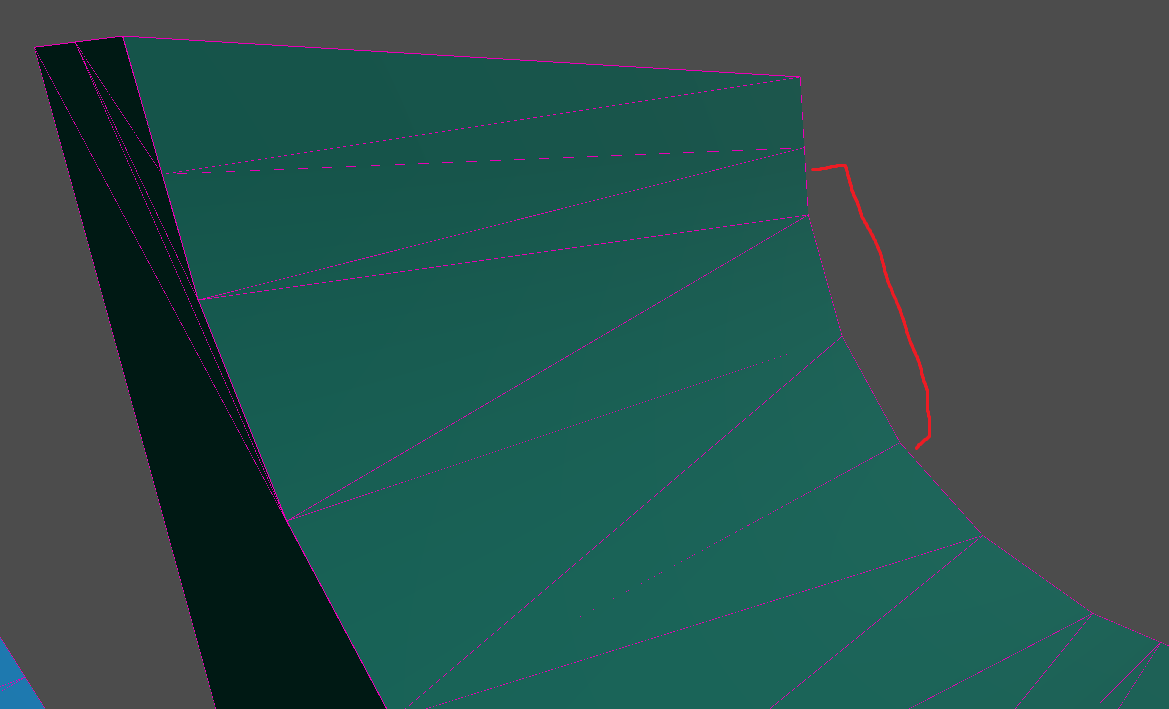

However, upon trying a different track mesh imported from Blender (3.4.0), I get erratic behavior:

Leading up the ramp, the traveling object begins to jitter and doesn't align with the surface. When traveling to the left, it slowly begins to angle to the right even though the track mesh is flat...

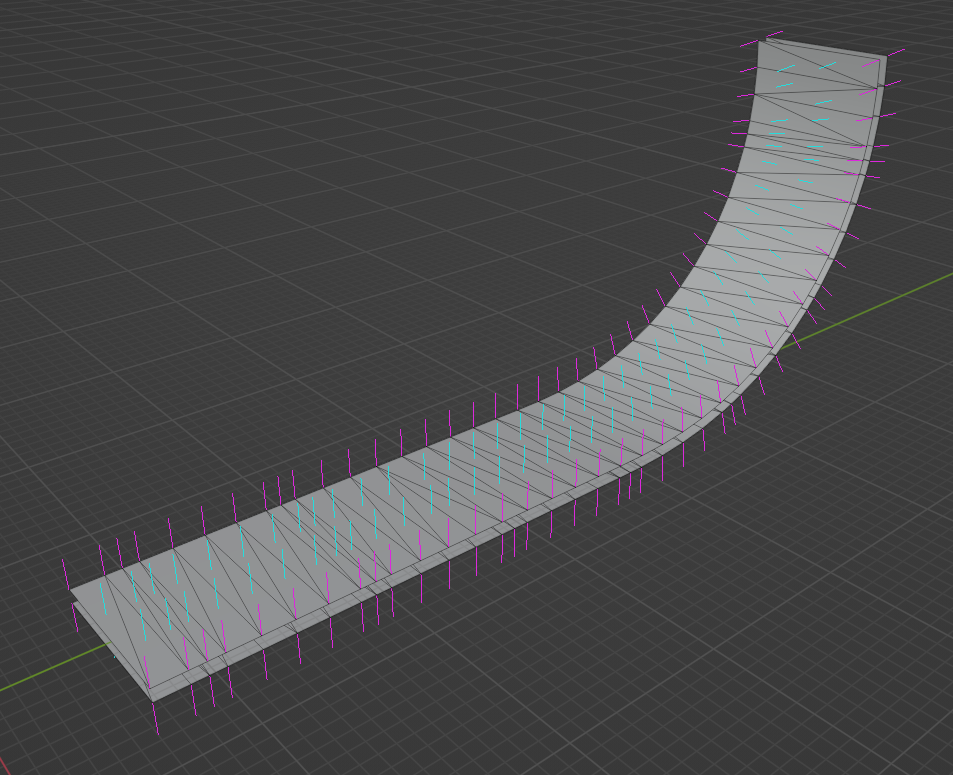

It seems the normal data is bad? I tried exporting the mesh in different file formats (.glTF 2.0, .escn, and .obj), but in every case the behavior was the same or worse. I also tried triangulating the mesh in Blender with preserved normals, and made sure to have smooth shading enabled. To make things more confusing, when the barycentric function is disabled, so that only the face normal of the track mesh is used, we do get the correct direction and the traveling object aligns properly (though without the smooth interpolation, of course).
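To be clear about the two cases I'm comparing, here is a minimal Python sketch of the math as I understand it. It is not the GDScript from the PR's example: the helper names (`barycentric`, `interpolated_normal`, `face_normal`) and the sample triangle are my own, and it assumes the hit point lies on the triangle's plane.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)

def barycentric(p, a, b, c):
    """Weights (u, v, w) with p = u*a + v*b + w*c, for p on the triangle's plane."""
    v0, v1, v2 = sub(b, a), sub(c, a), sub(p, a)
    d00, d01, d11 = dot(v0, v0), dot(v0, v1), dot(v1, v1)
    d20, d21 = dot(v2, v0), dot(v2, v1)
    denom = d00 * d11 - d01 * d01
    v = (d11 * d20 - d01 * d21) / denom
    w = (d00 * d21 - d01 * d20) / denom
    return (1.0 - v - w, v, w)

def interpolated_normal(p, verts, normals):
    """Blend the three vertex normals using the barycentric weights of p.

    normals[i] must belong to verts[i]; the weights are only meaningful
    when both tuples use the same vertex order.
    """
    weights = barycentric(p, *verts)
    blended = tuple(
        sum(weights[i] * normals[i][k] for i in range(3)) for k in range(3)
    )
    return normalize(blended)

def face_normal(a, b, c):
    """Flat normal of the triangle; its sign depends on the winding order."""
    return normalize(cross(sub(b, a), sub(c, a)))

# Hypothetical flat triangle with slightly tilted vertex normals.
A, B, C = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, -1.0)
NORMALS = ((0.0, 1.0, 0.0), (0.5, 1.0, 0.0), (0.0, 1.0, 0.5))
hit = (0.25, 0.0, -0.25)

flat = face_normal(A, B, C)
smooth = interpolated_normal(hit, (A, B, C), NORMALS)
```

The face normal depends only on the three positions, while the interpolated normal also depends on the per-vertex normals and on pairing each weight with the right vertex, so the second path has more places where imported data could go wrong.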

Granted, I'm fairly new to Godot (coming from an Unreal background) and am likely missing an import/export setting or not preparing the mesh properly. Any help or suggestions on how to fix this would be deeply appreciated, thanks!