Scylla-Leeezard If you need your mesh to be rotated, translated, etc., then you're gonna need to do that in Blender, not in Godot.

Or just (inverse) transform the returned coordinate between the mesh's local space and global space.

Scylla-Leeezard Thanks for the reply! Better late than never.

That would explain a lot, if it ignores mesh transformations. I take it you could grab the scale/rotation from the node and apply those to the function's result to get the "correct" gravity vector? (Unless that's what xyz is already referring to. I may be too green to know the difference.)

chetbeigemeister Geometry3D.get_triangle_barycentric_coords() is a generalized function that doesn't know anything about transformations. It just returns barycentric interpolation coords for a given point in a triangle. It's the caller's responsibility to put all arguments into the same transformation space. Otherwise the results won't be correct if the mesh instance has any non-identity transform.

The original code made the mistake of taking the triangle coordinates in mesh/object space while keeping the interpolated point position (obtained from raycasting) in global space.

If you need the normal in global space you can either:

1) transform the triangle vertices from mesh space to global space prior to passing them to the bary function, using the mesh instance's global_transform. And then transform vertex normals from mesh space to global space prior to interpolating them to get the final normal.

Or

2) transform the raycast point from global space to mesh space, get barycentric coordinates in mesh space and interpolate the normal in mesh space as well. Then transform the final normal from mesh space to global space, again using the mesh instance's global_transform.
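To make the two options concrete, here is a minimal sketch in plain Python so it runs without the engine. Lists stand in for Vector3, a basis is three column vectors like Godot's Basis, and the triangle, its normals and the mesh instance's global_transform (a 90° turn about Y plus a translation) are made-up values. `barycentric()` is a standard formula standing in for Geometry3D.get_triangle_barycentric_coords(), and `xform_inv()` assumes a rotation-only basis (no scale); all helper names are hypothetical.

```python
# Plain-Python stand-ins for Vector3 / Basis / Transform3D math (hypothetical helpers).
def add(a, b): return [a[i] + b[i] for i in range(3)]
def sub(a, b): return [a[i] - b[i] for i in range(3)]
def mul(a, s): return [a[i] * s for i in range(3)]
def dot(a, b): return sum(a[i] * b[i] for i in range(3))
def normalize(a): return mul(a, 1.0 / dot(a, a) ** 0.5)

def basis_xform(basis, v):
    """basis * v, where basis = (x, y, z) column vectors."""
    x, y, z = basis
    return add(add(mul(x, v[0]), mul(y, v[1])), mul(z, v[2]))

def xform(t, p):
    """transform * point: apply the basis, then add the origin."""
    basis, origin = t
    return add(basis_xform(basis, p), origin)

def xform_inv(t, p):
    """Inverse of xform; only valid for a rotation-only (orthonormal) basis."""
    basis, origin = t
    d = sub(p, origin)
    return [dot(axis, d) for axis in basis]

def barycentric(p, a, b, c):
    """Weights (u, v, w) with p = u*a + v*b + w*c."""
    v0, v1, v2 = sub(b, a), sub(c, a), sub(p, a)
    d00, d01, d11 = dot(v0, v0), dot(v0, v1), dot(v1, v1)
    d20, d21 = dot(v2, v0), dot(v2, v1)
    denom = d00 * d11 - d01 * d01
    v = (d11 * d20 - d01 * d21) / denom
    w = (d00 * d21 - d01 * d20) / denom
    return [1.0 - v - w, v, w]

def blend(normals, weights):
    """Barycentric interpolation of the three vertex normals."""
    return add(add(mul(normals[0], weights[0]), mul(normals[1], weights[1])),
               mul(normals[2], weights[2]))

# Hypothetical mesh-space triangle with per-vertex normals.
VERTS = [[0, 0, 0], [1, 0, 0], [0, 0, 1]]
NORMALS = [[0, 1, 0], [0.6, 0.8, 0], [0, 0.8, 0.6]]
# Hypothetical global_transform: 90 degrees about Y, then a translation.
MESH_XF = (([0, 0, -1], [0, 1, 0], [1, 0, 0]), [5, 2, -3])

def option1(hit_global):
    """Move vertices and normals to global space, then interpolate there."""
    g_verts = [xform(MESH_XF, v) for v in VERTS]
    g_normals = [basis_xform(MESH_XF[0], n) for n in NORMALS]
    return normalize(blend(g_normals, barycentric(hit_global, *g_verts)))

def option2(hit_global):
    """Move the hit point to mesh space, interpolate there, then rotate the result."""
    hit_local = xform_inv(MESH_XF, hit_global)
    n_local = blend(NORMALS, barycentric(hit_local, *VERTS))
    return normalize(basis_xform(MESH_XF[0], n_local))

# Fake raycast hit: weights (0.2, 0.3, 0.5) on the triangle, reported in global space.
hit = xform(MESH_XF, [0.3, 0.0, 0.5])
print(option1(hit))
print(option2(hit))
```

Both paths give the same global normal; they only differ in which space the barycentric weights are computed in. Option 2 transforms one point and one normal instead of three of each.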

xyz Assuming the result is for calculating the direction of gravity (meaning it's normalized, so position/scale is irrelevant), would it be adequate to simply take the Euler rotation of the mesh to transform the result from get_triangle_barycentric_coords()?

Apologies if this is just a rehash of what you're already saying. Some of this is going over my head; I'm trying to dumb it down for myself.

chetbeigemeister would it be adequate to simply take the Euler rotation of the mesh to transform the result from get_triangle_barycentric_coords()?

No, you need the whole transformation matrix. But this is trivial: you just multiply the vertex with the mesh node's global_transform, or with its inverse to transform back.
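A small sketch of why the rotation alone isn't enough for positions, in plain Python with made-up numbers: the mesh node is both rotated and translated, and undoing only the rotation leaves the hit point in the wrong place, so the barycentric weights come out wrong. The helper names are hypothetical, and the inverse again assumes a rotation-only basis.

```python
def add(a, b): return [a[i] + b[i] for i in range(3)]
def sub(a, b): return [a[i] - b[i] for i in range(3)]
def mul(a, s): return [a[i] * s for i in range(3)]
def dot(a, b): return sum(a[i] * b[i] for i in range(3))

def barycentric(p, a, b, c):
    """Weights (u, v, w) with p = u*a + v*b + w*c."""
    v0, v1, v2 = sub(b, a), sub(c, a), sub(p, a)
    d00, d01, d11 = dot(v0, v0), dot(v0, v1), dot(v1, v1)
    d20, d21 = dot(v2, v0), dot(v2, v1)
    denom = d00 * d11 - d01 * d01
    v = (d11 * d20 - d01 * d21) / denom
    w = (d00 * d21 - d01 * d20) / denom
    return [1.0 - v - w, v, w]

# Hypothetical mesh node: basis columns for 90 degrees about Y, plus an origin.
BASIS = ([0, 0, -1], [0, 1, 0], [1, 0, 0])
ORIGIN = [5, 2, -3]
TRI = ([0, 0, 0], [1, 0, 0], [0, 0, 1])  # triangle in mesh space

def rotate(v):
    return add(add(mul(BASIS[0], v[0]), mul(BASIS[1], v[1])), mul(BASIS[2], v[2]))

def unrotate(v):
    # Transposed multiply: the inverse of a pure rotation.
    return [dot(axis, v) for axis in BASIS]

local_point = [0.3, 0.0, 0.5]                    # weights (0.2, 0.3, 0.5) on TRI
global_point = add(rotate(local_point), ORIGIN)  # what the raycast would report

rotation_only = barycentric(unrotate(global_point), *TRI)
full_inverse = barycentric(unrotate(sub(global_point, ORIGIN)), *TRI)
print(rotation_only)  # far outside [0, 1]: the origin was never removed
print(full_inverse)   # ~[0.2, 0.3, 0.5]
```

For a direction such as the final normal, the origin drops out and the basis alone is enough, which is what the later replies in the thread rely on.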

xyz Hello! I am so sorry to necropost; however, you are the only person I've been able to find giving good advice on this topic.

I'm getting results similar to OP's (mainly post 4) using mostly similar code. I've experimented with your answer, and I cannot seem to figure out where exactly you would convert between local and global spaces. I've tried to think about it logically, but I can't seem to get it right.

Could one just multiply the vertices in the for loop by global_transform and leave it at that? For example: `var vertices: Array[Vector3] = other.get_vertex_positions_at_face_index(ray_cast.get_collision_face_index()) * global_transform`

When you say "transform vertex normals from mesh space to global space prior to interpolating them to get the final normal," are you saying that you could do the same thing as with the vertices array (multiply the normals by global_transform during iteration)? I think what I'm not understanding is the "prior to interpolation" part, as in, which step in the process that would be. Wouldn't that be when OP calls align_up_direction and passes it the normal?

xyz Yes it does!

To give you an idea of what is working, here is the core code. It's adapted from OP's code, but I made the global-space changes.

You can see I multiply each vertex by GlobalTransform, and I also multiply upNormal by it when I pass it to SetUpDirection().

```csharp
Vector3[] vertices = new Vector3[3];
Vector3[] normals = new Vector3[3];

for (int i = 0; i < 3; i++)
{
    vertices[i] = meshData.GetVertex(meshData.GetFaceVertex(normRay.GetCollisionFaceIndex(), i)) * GlobalTransform;
    normals[i] = meshData.GetVertexNormal(meshData.GetFaceVertex(normRay.GetCollisionFaceIndex(), i));
}

Vector3 baryCoords = Geometry3D.GetTriangleBarycentricCoords(normRay.GetCollisionPoint(), vertices[0], vertices[1], vertices[2]);
Vector3 upNormal = (normals[0] * baryCoords.X) + (normals[1] * baryCoords.Y) + (normals[2] * baryCoords.Z);
upNormal = upNormal.Normalized();

SetUpDirection(upNormal * GlobalTransform, delta);
```

In SetUpDirection(), you can see I'm using GlobalTransform as well.

```csharp
private void SetUpDirection(Vector3 upNormal, double delta)
{
    Transform3D normTransform = GlobalTransform;
    normTransform.Basis.Y = upNormal;
    normTransform.Basis.X = -normTransform.Basis.Z.Cross(normTransform.Basis.Y);
    normTransform = normTransform.Orthonormalized();
    GlobalTransform = GlobalTransform.InterpolateWith(normTransform, .5f);
}
```

paftdunk Matrix multiplication is not commutative. The order of operands is important. To transform a vertex by a matrix, the order should be matrix * vertex. Your code is doing vertex * matrix. This is equivalent to multiplying with a transposed matrix, which results in the inverse transformation (if the matrix is orthonormal).
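A tiny illustration of the operand-order point in plain Python, using a made-up row-major 3x3 rotation (90° about Y). Treating the vector as a column (`M * v`) applies the rotation; treating it as a row (`v * M`) is the same as multiplying by the transpose, which for a rotation is the inverse, so it undoes `M * v`. Godot's `Vector3 * Transform3D` is documented along the same lines (an inverse transform that assumes an orthonormal basis); this sketch covers only the 3x3 part and ignores the origin.

```python
def mat_vec(m, v):
    """M * v with v as a column vector (how a transform should be applied)."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]

def vec_mat(v, m):
    """v * M with v as a row vector, which equals transpose(M) * v."""
    return [sum(v[r] * m[r][c] for r in range(3)) for c in range(3)]

def transpose(m):
    return [[m[c][r] for c in range(3)] for r in range(3)]

# Rotation by 90 degrees about Y (row-major); it maps +X to -Z.
M = [[0, 0, 1],
     [0, 1, 0],
     [-1, 0, 0]]

v = [1, 0, 0]
print(mat_vec(M, v))              # [0, 0, -1]
print(vec_mat(v, M))              # [0, 0, 1]  (rotated the other way)
print(vec_mat(mat_vec(M, v), M))  # [1, 0, 0]  (v * M undoes M * v)
```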

Also, to transform a normal properly you need to nullify the translation part of the matrix, or use only the 3x3 submatrix, aka the basis. So you can do matrix.basis * normal_vector. If there is some proportional scaling in the basis, you either need to orthonormalize the basis prior to multiplication or normalize the resulting normal vector. If there is non-proportional scaling in the matrix, you need to use the transposed inverse of the actual matrix. Godot's Transform3D has no transpose function, but you can do normal_vector * matrix.basis.inverse(), where the reversed operand order transposes the matrix.
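A plain-Python sketch of the non-uniform-scale case, with a made-up basis that stretches X by 2. A tangent lying in the surface is transformed like any direction (`basis * t`). If the normal is transformed the same way, it is no longer perpendicular to that tangent; the inverse transpose keeps it perpendicular. The hypothetical `normal_xform()` builds the inverse transpose from cross products of the basis columns (each row of the inverse is a cross product of the other two columns divided by the determinant), which should correspond to `normal_vector * matrix.basis.inverse()`.

```python
def add(a, b): return [a[i] + b[i] for i in range(3)]
def mul(a, s): return [a[i] * s for i in range(3)]
def dot(a, b): return sum(a[i] * b[i] for i in range(3))

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def basis_xform(basis, v):
    """basis * v for basis = (x, y, z) column vectors."""
    x, y, z = basis
    return add(add(mul(x, v[0]), mul(y, v[1])), mul(z, v[2]))

def normal_xform(basis, n):
    """transpose(inverse(basis)) * n; normalize the result before using it."""
    x, y, z = basis
    det = dot(x, cross(y, z))
    # Rows of inverse(basis) are (y×z, z×x, x×y) / det; transposing makes them columns.
    summed = add(add(mul(cross(y, z), n[0]), mul(cross(z, x), n[1])), mul(cross(x, y), n[2]))
    return mul(summed, 1.0 / det)

# Hypothetical non-uniform scale: X stretched by 2, Y and Z unchanged.
B = ([2, 0, 0], [0, 1, 0], [0, 0, 1])
normal = [1, 1, 0]    # normal of a 45-degree slope (not normalized)
tangent = [1, -1, 0]  # a direction lying in that slope

t = basis_xform(B, tangent)
print(dot(basis_xform(B, normal), t))   # 3: basis * normal is no longer perpendicular
print(dot(normal_xform(B, normal), t))  # 0.0: the inverse transpose stays perpendicular
```

For a pure rotation the inverse transpose equals the basis itself, which is why matrix.basis * normal_vector is enough when there is no scaling.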

xyz That makes sense! Matrix math is fairly new to me, so this is certainly trial-by-fire learning.

I'm getting slightly different results now that I have updated the logic. In particular, I'm now transforming the vertices correctly, and on its own that works great.

```csharp
vertices[i] = GlobalTransform * meshData.GetVertex(meshData.GetFaceVertex(normRay.GetCollisionFaceIndex(), i));
```

I believe I am MOSTLY following what you are saying about the normal transformation (I have no scaling). This is what I did:

```csharp
normals[i] = GlobalTransform.Basis * meshData.GetVertexNormal(meshData.GetFaceVertex(normRay.GetCollisionFaceIndex(), i));
```

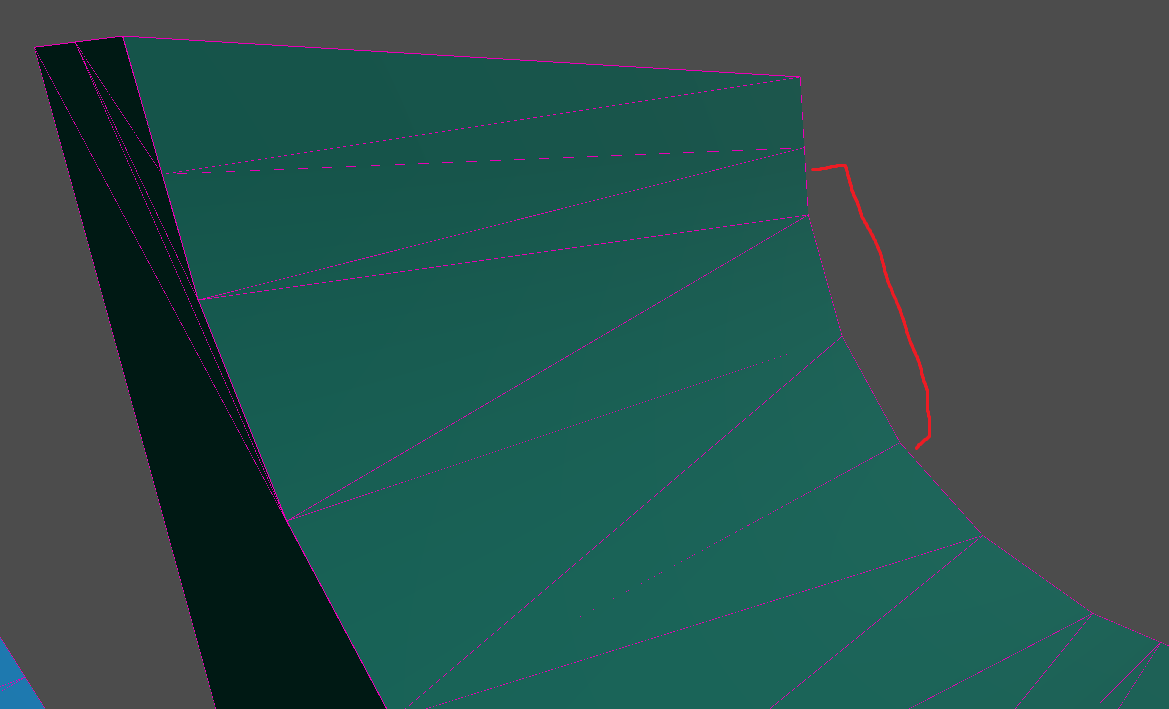

I now get the result below. I am trying to understand the mathematics of the issue here. The mesh I am moving ramps up early, so I was thinking at that point there may be an issue with the vertex transformation. Once it turned around and started shaking erratically, I figured it had something to do with the normals calculation.

If you happen to have any ideas, could you explain mathematically what would cause that shaking? I've racked my brain but cannot think of what it would be (as a side note, I move the moving mesh with my keyboard by updating its position on the z-axis).

paftdunk Post a better image and complete code. Hard to see what's happening there. Remove the interpolation in SetUpDirection for now. First make it work instantly. Just assign the final transform you calculated. Interpolation may introduce other problems. You interpolate barycentric coords anyway so it should work smooth (when on smooth surfaces) without that last interpolation. Always try to isolate the issue into minimum of code. Oh, and you need a second cross product there when calculating the final basis to ensure the wanted orthogonality.

xyz Good point. I did notice that the documentation for getting the barycentric coords mentions that it is already interpolating, so thank you for confirming that. I have removed the interpolation and am getting SLIGHTLY better-looking results.

Could you explain the need for the second cross product?

Here is a video:

Here is the full code:

```csharp
private void SetUpDirection(Vector3 upNormal, double delta)
{
    Transform3D normTransform = GlobalTransform;
    normTransform.Basis.Y = upNormal;
    normTransform.Basis.X = -normTransform.Basis.Z.Cross(normTransform.Basis.Y);
    normTransform = normTransform.Orthonormalized();
    GlobalTransform = normTransform;
}

public override void _PhysicsProcess(double delta)
{
    if (Input.IsActionPressed("move_test"))
    {
        Vector3 newPos = GlobalPosition;
        newPos.Z -= 6.0f * (float)delta;
        GlobalPosition = newPos;
    }

    if (Input.IsActionPressed("move_test2"))
    {
        Vector3 newPos = GlobalPosition;
        newPos.Z += 6.0f * (float)delta;
        GlobalPosition = newPos;
    }

    if (normRay.IsColliding())
    {
        CollisionObject3D hit = (CollisionObject3D)normRay.GetCollider();

        Vector3 newPos = GlobalPosition;
        newPos.Y = normRay.GetCollisionPoint().Y + .1f;
        GlobalPosition = newPos;

        SetUpDirection(normRay.GetCollisionNormal(), delta);

        if (hit.IsInGroup("AlignmentTest"))
        {
            MeshInstance3D meshInstance = hit.GetNode<MeshInstance3D>("Mesh");
            Mesh mesh = meshInstance.Mesh;
            meshData.CreateFromSurface((ArrayMesh)mesh, 0);

            Vector3[] vertices = new Vector3[3];
            Vector3[] normals = new Vector3[3];

            for (int i = 0; i < 3; i++)
            {
                vertices[i] = GlobalTransform * meshData.GetVertex(meshData.GetFaceVertex(normRay.GetCollisionFaceIndex(), i));
                normals[i] = GlobalTransform.Basis * meshData.GetVertexNormal(meshData.GetFaceVertex(normRay.GetCollisionFaceIndex(), i));
            }

            Vector3 baryCoords = Geometry3D.GetTriangleBarycentricCoords(normRay.GetCollisionPoint(), vertices[0], vertices[1], vertices[2]);
            Vector3 upNormal = (normals[0] * baryCoords.X) + (normals[1] * baryCoords.Y) + (normals[2] * baryCoords.Z);
            upNormal = upNormal.Normalized();

            SetUpDirection(upNormal, delta);
        }
    }
}
```

paftdunk Could you explain the need for the second cross product?

I explained it. It ensures the orthogonality of the basis vectors. orthonormalized() does that as well, but it does not guarantee the order in which it does the cross products. So if your basis vectors are not perpendicular when calling orthonormalized(), the function will have to change the direction of some of them to make them perpendicular, and you don't know which ones will be changed. It may happen to be y, which is your interpolated normal. You don't want that to be changed. So it's better to do it yourself using two cross products, so that you end up with orthogonal vectors, and use orthonormalized() only to normalize the vector lengths.

So:

y = normal

x = y cross z (or, as you've put it, -(z cross y))

z = x cross y

orthonormalize
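The recipe above, sketched in plain Python (lists stand in for Vector3; `align_basis` is a hypothetical helper, not a Godot function). With y fixed to the normal, the first cross product gives an x perpendicular to both y and the old forward axis, and the second rebuilds z from x and y, so the axes are already perpendicular before normalization and the normal itself is never altered.

```python
def mul(a, s): return [a[i] * s for i in range(3)]
def dot(a, b): return sum(a[i] * b[i] for i in range(3))
def normalize(a): return mul(a, 1.0 / dot(a, a) ** 0.5)

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def align_basis(current_z, normal):
    """Return (x, y, z) with y along `normal`, keeping the heading of `current_z`.

    Assumes current_z is not parallel to normal (the cross product would be zero).
    """
    y = normalize(normal)
    x = normalize(cross(y, current_z))  # same as -(current_z cross y)
    z = cross(x, y)                     # unit length already: x and y are orthonormal
    return x, y, z

# Example: the board faces +Z and reaches a 45-degree slope.
x, y, z = align_basis([0.0, 0.0, 1.0], [0.0, 1.0, 1.0])
print(x)  # ~[1, 0, 0]
print(z)  # ~[0, -0.707, 0.707]
```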

xyz I gotcha now, thank you! I've learned a lot math-wise from you, and I appreciate that. I'm going to keep reading and practicing so that maybe one day I'll be able to tackle this, since right now I just cannot get it working.

I'm going to go back to the drawing board and figure out a new approach to this problem.

paftdunk Normals/vertices need to be transformed from the collider's space to global space, not from the skate's space, which is what you're currently doing. That doesn't make much sense. So the matrix/basis you're multiplying them with needs to be the collider's, not the skate's.

You copy-pasted my pseudocode without thinking. I used GlobalTransform as a generalization, hoping it would be understood that this is the GlobalTransform of whichever object the triangles belong to.

xyz Wow, I feel dumb. This makes sense and is what I mean by trying to do things I do not understand, and just copying pseudocode in a desperate attempt to do so.

Thank you so much again. I have one more thing; otherwise I'm going to ask for your PayPal and pay you for your time.

I assume this isn't a "bug", but here's what I am working with now (code is the same, I just corrected the GlobalTransform to hit.GlobalTransform):

On the first ramp, you can see that it either goes through the mesh OR moves very quickly ahead and off track if I move it slowly enough. The collision faces look good to me, but I'm wondering if it's because of the sharpness of the angle moving up?

I would assume this is related: when I get close to an edge, the board starts to rotate 90 degrees. I get that the edge face is perpendicular to the current one; however, I'm not sure why it'd rotate around the y-axis and not the x-axis in this case. What would cause this, either on my end or mathematically?

Again, thank you for your time. You are awesome.

paftdunk Well, you're trying to move the skate only in the global horizontal plane. That will seemingly work only on near-horizontal surfaces. The steeper it gets, the glitchier it becomes. Since you're changing the skate's orientation in 3D space, it needs to move "forward" from its own point of view. This "forward" changes as you rotate the basis. So to go "forward" you need to move it along its z basis vector. Ditto for y when "vertically" constraining to a collider: you need to move along the normal (or y basis), not along the global y axis. If done properly, the skate should go smoothly around a sphere without glitches.
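A plain-Python sketch of that idea on an ideal sphere: each step moves the skate along its own -Z (Godot's forward convention), then places it at the surface hit point plus the normal times a hover height, instead of editing only the global Y. `sphere_hit()` is a hypothetical analytic stand-in for the RayCast3D, and the radius, hover height, speed and timestep are made-up values; the re-alignment is the two-cross-product recipe from earlier in the thread.

```python
def add(a, b): return [a[i] + b[i] for i in range(3)]
def sub(a, b): return [a[i] - b[i] for i in range(3)]
def mul(a, s): return [a[i] * s for i in range(3)]
def dot(a, b): return sum(a[i] * b[i] for i in range(3))
def normalize(a): return mul(a, 1.0 / dot(a, a) ** 0.5)

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

CENTER = [0.0, 0.0, 0.0]
RADIUS = 5.0
HOVER = 0.1  # plays the role of the original "+ .1f"

def sphere_hit(position):
    """Hypothetical analytic raycast: closest surface point and its normal."""
    normal = normalize(sub(position, CENTER))
    return add(CENTER, mul(normal, RADIUS)), normal

def simulate(steps, speed=6.0, delta=1.0 / 60.0):
    position = [0.0, RADIUS + HOVER, 0.0]
    basis = ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0])  # x, y, z columns
    for _ in range(steps):
        # 1) Move "forward" along the skate's own -Z axis, not the global Z axis.
        position = add(position, mul(basis[2], -speed * delta))
        # 2) Query the surface under the skate.
        hit, normal = sphere_hit(position)
        # 3) Re-align the basis: y = normal, x = y cross z, z = x cross y.
        x = normalize(cross(normal, basis[2]))
        basis = (x, normal, cross(x, normal))
        # 4) Constrain along the normal instead of setting only the global Y.
        position = add(hit, mul(normal, HOVER))
    return position, basis

pos, (bx, by, bz) = simulate(100)
print(pos)  # past the "equator": y is now negative
```

In the CharacterBody3D version, step 1 corresponds to the `-Transform.Basis.Z * forwardSpeed` velocity, and step 4 replaces assigning only `newPos.Y`.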

Hard edges will obviously be a problem that needs some additional care, but that's for another topic.

xyz AWESOME. I'm able to fully traverse a sphere now!! This should be my last question to put everything together. I understand using the basis to move (as you said, we need to take the rotation into account).

Here's my code (I'm using CharacterBody3D in my actual project, but the code is mostly the same as in the demo project, just using move_and_slide() to handle movement):

```csharp
Vector3 velocity = Vector3.Zero;

// Handle rotation based off of user input
Basis newBasis = Transform.Basis.Rotated(Transform.Basis.Y, turnInput * TurnRate * (float)delta);
Transform = new Transform3D(newBasis, Transform.Origin);

// ....bary normal collision code etc....

// Forward movement
velocity = -Transform.Basis.Z * forwardSpeed;
Velocity = velocity;
MoveAndSlide();
```

I believe I implemented the forward movement correctly. The only thing I can't figure out is how to do the same on the y-axis, especially after setting the velocity to -basis.z * speed. I'm not exactly sure how to do this with the basis.

Would it be something similar to creating a new Transform3D, setting the y-basis based on the up normal and the position in the world like basis.y = normal...? I think I'm just struggling to imagine what the implementation would look like in code - reason being, I can't think of what I'd set the basis to/how I'd use it with movement calculations. All I can think to do is position.y = normal.get_collision_point() + ...

After this I'll have everything I need, so I'll work to figure out the hard edges etc. on my own (I'll start a new thread if that happens).