I was trying to do some custom mesh making, originally by creating a cylinder of variable height and then deforming the vertices at different levels. However, I didn't see that scaling very well for my goals.

My new attempt is to take a file exported from Blender, create multiple instances of it, deform each level in code, and then merge them all into one mesh.

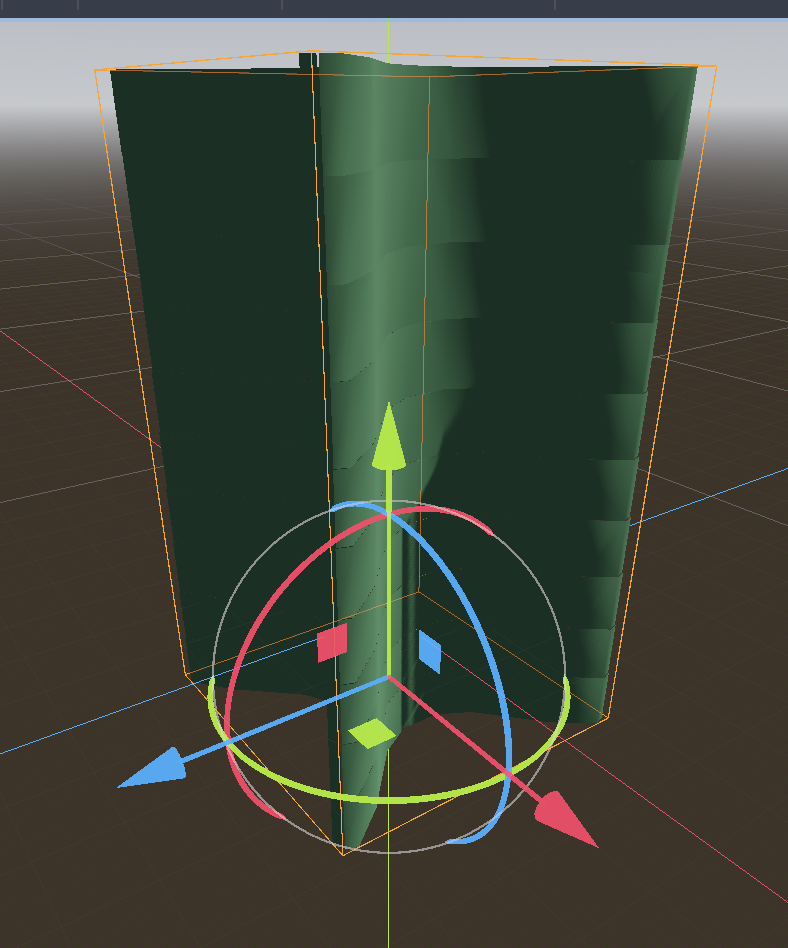

The exported model is more or less like an uncapped cylinder for the most part.

The logic is to take the mesh, offset each MeshInstance3D vertically according to its level, and then use SurfaceTool to add them together.

    func combineMeshes(meshes: Array):
        print("Stitching %s meshes" % meshes.size())
        var surfaceTool = SurfaceTool.new()
        surfaceTool.begin(Mesh.PRIMITIVE_TRIANGLES)
        for instance in meshes:
            assert(instance is MeshInstance3D, "not given a MeshInstance3D")
            surfaceTool.append_from(instance.mesh, 0, instance.transform)
        surfaceTool.generate_normals()
        self.meshInstance.mesh = surfaceTool.commit()

It basically works, in that it creates the instances of the mesh, deforms them, and combines them vertically in a column.

The issue is that even though they are in the same Mesh they don't connect to each other. There is a very clear micro-line separating each one and the lighting makes it even more obvious.

Issue 1: This was especially bad when I was modulating the rotation of the levels slightly, which I don't do anymore.

Issue 2: It was also an issue when I hadn't perfectly leveled the points of my model. A single high point in the model threw the mesh's AABB way off and broke the alignment. Even so, I feel like if it were actually stitching the meshes together, they would still be joined, just with an artifact.
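One way to sidestep the AABB problem is to space the levels by a fixed, known height instead of measuring each mesh. This is only a minimal sketch, assuming every level has the same nominal height; `level_transform` and `level_height` are hypothetical names that do not appear in the code above.

```gdscript
# Hypothetical helper: builds the transform for one level from a fixed height,
# so a stray high vertex cannot change the spacing the way an AABB measurement can.
func level_transform(level_index: int, level_height: float) -> Transform3D:
	var offset := Vector3(0.0, level_index * level_height, 0.0)
	return Transform3D(Basis.IDENTITY, offset)
```

Each instance would then get `instance.transform = level_transform(i, level_height)` before being passed to `combineMeshes`.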

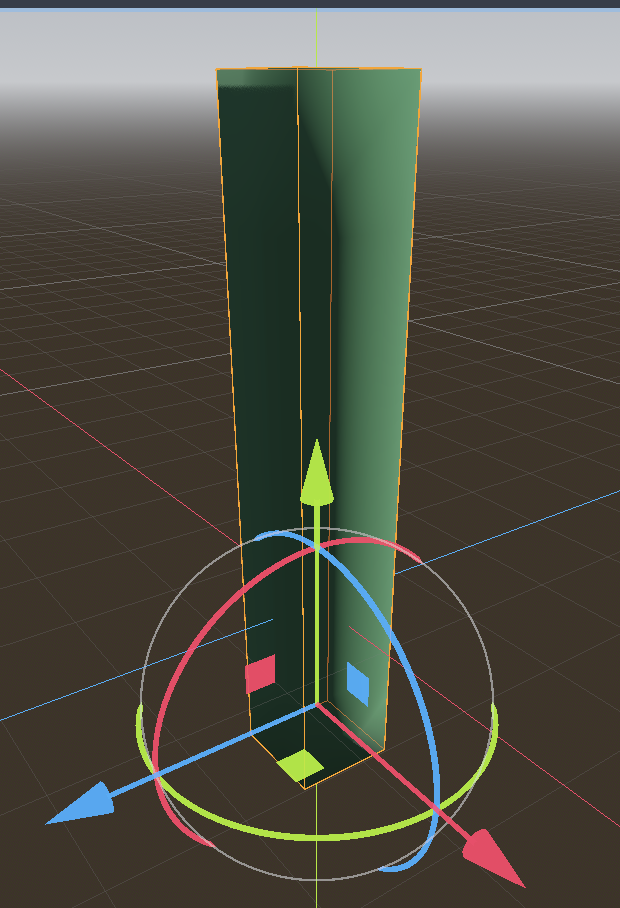

I then just tried doing it with a BoxMesh and it actually works perfectly. Here is an example of 6 boxes on top of each other.

My thought is that the vertices of the BoxMesh just align with each other well enough that the system considers them contiguous when they are added to SurfaceTool. And maybe the triangle count of my Blender mesh just happens to miss that? But if that were the case, I'd kind of imagine more glitchiness than what I am seeing.
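The alignment hypothesis can be checked directly by comparing the top ring of one level against the bottom ring of the next. The sketch below assumes the seam sits at a known height `y` and that surface 0 holds the geometry; `vertices_at_height`, `count_unmatched`, and the tolerance value are hypothetical and would need tuning.

```gdscript
# Collects the transformed vertices of surface 0 that lie at height `y` (within `tolerance`).
func vertices_at_height(mesh: Mesh, xform: Transform3D, y: float, tolerance: float = 0.0001) -> PackedVector3Array:
	var ring := PackedVector3Array()
	var arrays: Array = mesh.surface_get_arrays(0)
	var vertices: PackedVector3Array = arrays[Mesh.ARRAY_VERTEX]
	for v in vertices:
		var moved: Vector3 = xform * v
		if absf(moved.y - y) <= tolerance:
			ring.append(moved)
	return ring

# Counts vertices in `upper_ring` that have no partner in `lower_ring` within `tolerance`.
# A result above zero means the two rings do not line up vertex for vertex.
func count_unmatched(upper_ring: PackedVector3Array, lower_ring: PackedVector3Array, tolerance: float = 0.0001) -> int:
	var unmatched := 0
	for a in upper_ring:
		var found := false
		for b in lower_ring:
			if a.distance_to(b) <= tolerance:
				found = true
				break
		if not found:
			unmatched += 1
	return unmatched
```

If the count is zero for the boxes but not for the Blender mesh, that would support the idea that the seam comes from mismatched ring vertices rather than from SurfaceTool itself.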

I'd like to have some logic that stitches the meshes together as each one is added, but I don't know exactly how to do this.
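One possible direction is to weld the seam by snapping every vertex to a small grid before it goes into SurfaceTool, so that nearly coincident vertices from neighbouring levels end up at identical positions. This rests on the assumption that `generate_normals()` only smooths across vertices whose positions match exactly, which is worth verifying against the engine version in use. `snap_vertex`, `combine_meshes_welded`, and `snap_step` are hypothetical names. The sketch copies positions only, so UVs and other vertex attributes are dropped.

```gdscript
# Hypothetical helper: rounds a position to a grid of size `snap_step`.
func snap_vertex(v: Vector3, snap_step: float) -> Vector3:
	return v.snapped(Vector3(snap_step, snap_step, snap_step))

# Hypothetical variant of combineMeshes() that feeds snapped vertices to SurfaceTool
# one by one instead of calling append_from(). Positions only: UVs are not copied.
func combine_meshes_welded(meshes: Array, snap_step: float = 0.001) -> ArrayMesh:
	var surface_tool := SurfaceTool.new()
	surface_tool.begin(Mesh.PRIMITIVE_TRIANGLES)
	for instance in meshes:
		assert(instance is MeshInstance3D, "not given a MeshInstance3D")
		var arrays: Array = instance.mesh.surface_get_arrays(0)
		var vertices: PackedVector3Array = arrays[Mesh.ARRAY_VERTEX]
		# The index array is null when the surface is not indexed.
		var indices := PackedInt32Array()
		if arrays[Mesh.ARRAY_INDEX] != null:
			indices = arrays[Mesh.ARRAY_INDEX]
		var count: int = vertices.size() if indices.is_empty() else indices.size()
		for i in count:
			var source_index: int = i if indices.is_empty() else indices[i]
			var moved: Vector3 = instance.transform * vertices[source_index]
			surface_tool.add_vertex(snap_vertex(moved, snap_step))
	surface_tool.generate_normals()
	surface_tool.index()  # merge duplicate vertices after normals are computed
	return surface_tool.commit()
```

Snapping only closes gaps smaller than the grid size, and two vertices that sit on either side of a grid boundary can still snap apart. If the deformation gives the top ring of one level a different shape from the bottom ring of the next, the rings would have to be matched before merging.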