Hi, my goal is to write a shader for a smooth gradient.

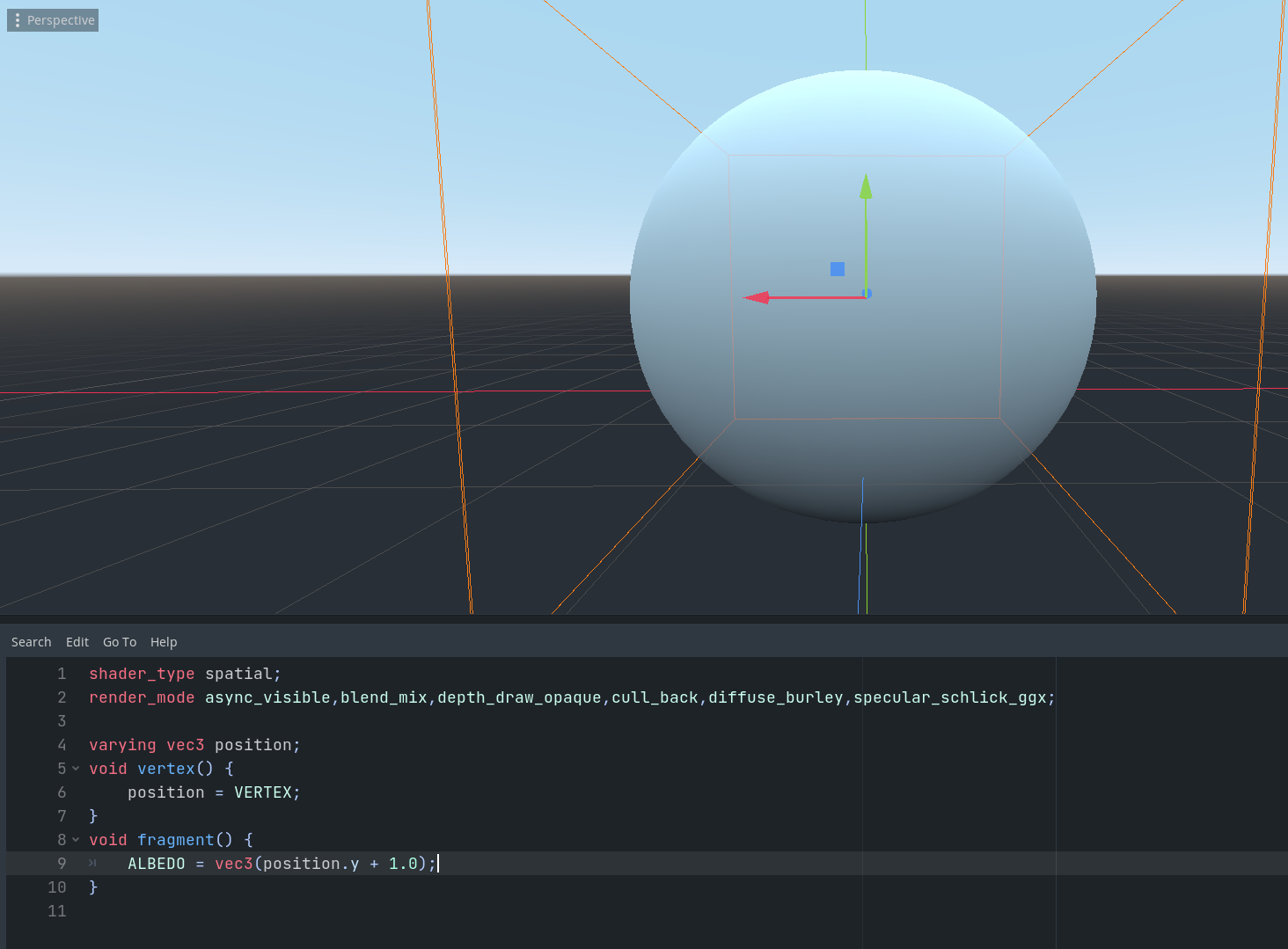

For example I would like to be able to color a sphere so that it's bottom is completely black and its top completely white, like this:

My problem is that the coordinates in the VERTEX built-in are in the mesh's local space. This means they are measured relative to the mesh origin and can be both greater than 1 and negative.

The example above only works because I added a magic + 1.0 in the shader code and the sphere has a radius of 1.0.

If I make the sphere larger without changing the shader, the gradient changes:

Is there a way to normalize these VERTEX coordinates to [0..1] in the shader? My goal is to be able to put this gradient shader on meshes of different sizes.
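
To make the question concrete, here is a rough sketch of the kind of normalization I have in mind. It is only an assumption about how this could work, not a known solution. The uniform names `y_min` and `y_max` are hypothetical, and they would have to be set from outside the shader (for example from the mesh's bounding box), since as far as I can tell the shader has no way to find out the mesh's size on its own.

```glsl
shader_type spatial;

// Hypothetical uniforms: lowest and highest local-space Y of the mesh.
// The defaults match a sphere of radius 1.0 centered on its origin.
uniform float y_min = -1.0;
uniform float y_max = 1.0;

// Normalized height, passed from the vertex stage to the fragment stage.
varying float height;

void vertex() {
	// Remap local Y from [y_min, y_max] to [0, 1].
	height = (VERTEX.y - y_min) / (y_max - y_min);
}

void fragment() {
	// 0.0 (black) at the bottom, 1.0 (white) at the top.
	ALBEDO = vec3(clamp(height, 0.0, 1.0));
}
```

With the default values this gives (y + 1.0) / 2.0, which covers the radius 1.0 case. A larger sphere would need different values for `y_min` and `y_max`, which is exactly the per-mesh adjustment I would like to avoid if there is a better way.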