
Hello,

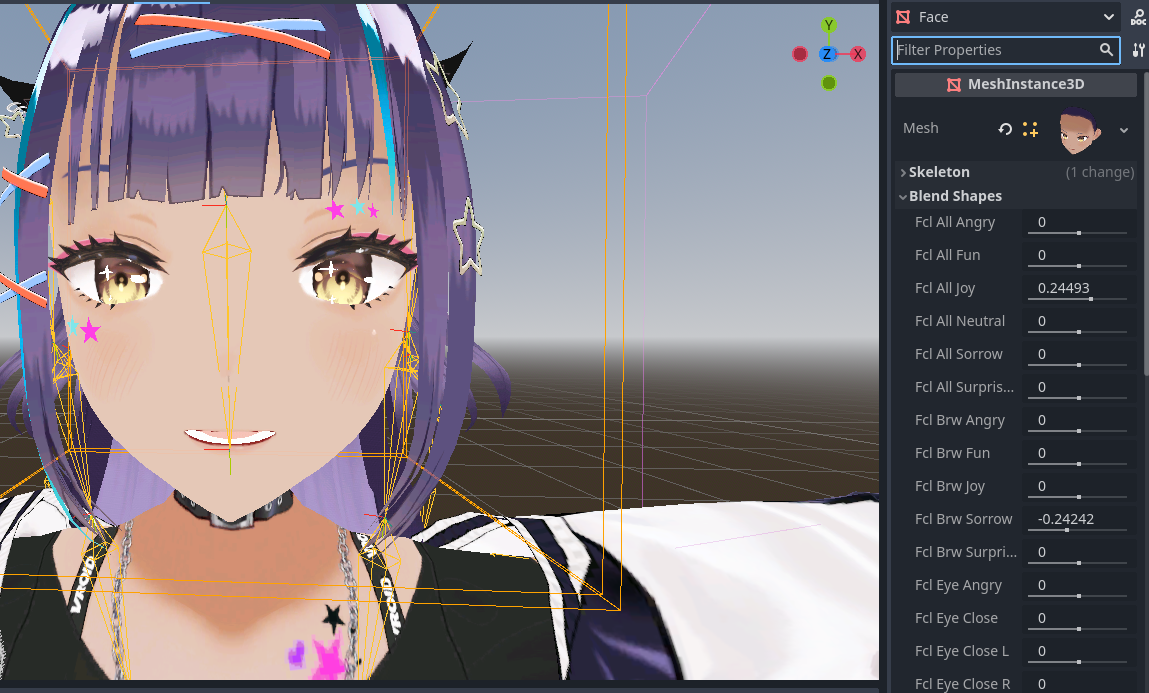

In games, believable human characters depend heavily on compelling facial animation. Realistic facial animation has come a long way: technologies like Apple ARKit and Google MediaPipe can map a real face onto 52 parameters, known as blend shapes.

Here's an example (NOT MINE) of how this technology can work (this tech is mainly used by vtubers):

A human face can be represented as a vector of blend shape values, each ranging from 0 to 1. Since facial expressions change continuously, the motion of a face over time can be described as a path in the 52-dimensional blend shape space. The usable part of this space is relatively small, because many combinations of blend shape values produce unnatural expressions.
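
To make the "path in blend shape space" idea concrete, here is a minimal sketch in plain Python. It assumes a face is simply a list of 52 floats; the names `clamp_face` and `lerp_path` are made up for illustration, and index 0 is only a stand-in for some smile-related blend shape.

```python
from typing import List

NUM_BLEND_SHAPES = 52  # parameter count mentioned above

Face = List[float]  # one value per blend shape, each in [0, 1]


def clamp_face(face: Face) -> Face:
    """Clamp every blend shape value into the valid [0, 1] range."""
    return [min(1.0, max(0.0, v)) for v in face]


def lerp_path(start: Face, end: Face, steps: int) -> List[Face]:
    """Straight-line path from `start` to `end`, sampled at `steps` frames."""
    if len(start) != NUM_BLEND_SHAPES or len(end) != NUM_BLEND_SHAPES:
        raise ValueError("each face needs exactly 52 values")
    if steps < 2:
        raise ValueError("a path needs at least two frames")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # goes from 0.0 to 1.0
        path.append(clamp_face([a + (b - a) * t for a, b in zip(start, end)]))
    return path


neutral = [0.0] * NUM_BLEND_SHAPES
smile = [0.0] * NUM_BLEND_SHAPES
smile[0] = 0.8  # hypothetical: index 0 stands in for a smile blend shape
path = lerp_path(neutral, smile, steps=5)
```

A straight line is the simplest possible path. Real expressions recorded from video will curve through the space, which is exactly what a dataset of paths would capture.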

My objectives are twofold:

- Lip sync from text: This is achievable given the 1:1 correspondence between phonemes and blend shapes, but constructing the intensity of each phoneme over time requires substantial effort.

- Procedural animations: This goal is challenging and requires extensive research.
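
For the lip sync objective, one possible way to build the intensity of each phoneme over time is a simple envelope per phoneme. This is only a sketch under assumptions: the phoneme timings are assumed to come from some external source (for example a TTS engine or an aligner), the `PHONEME_TO_SHAPE` table is hypothetical and depends on the rig, and the 50 ms fade is an arbitrary choice.

```python
from typing import Dict, List, Tuple

# Hypothetical phoneme -> blend shape table; real names depend on the rig.
PHONEME_TO_SHAPE: Dict[str, str] = {
    "AA": "jawOpen",
    "OO": "mouthFunnel",
    "MM": "mouthClose",
}

Timeline = List[Tuple[str, float, float]]  # (phoneme, start_s, end_s)


def phoneme_intensity(t: float, start: float, end: float, fade: float = 0.05) -> float:
    """Trapezoid envelope: ramp up before `start`, hold at 1, ramp down after `end`."""
    if t <= start - fade or t >= end + fade:
        return 0.0
    if t < start:
        return (t - (start - fade)) / fade
    if t > end:
        return ((end + fade) - t) / fade
    return 1.0


def lip_sync_frame(timeline: Timeline, t: float) -> Dict[str, float]:
    """Blend shape weights at time `t`; overlapping phonemes keep the max weight."""
    weights: Dict[str, float] = {}
    for phoneme, start, end in timeline:
        shape = PHONEME_TO_SHAPE[phoneme]
        w = phoneme_intensity(t, start, end)
        weights[shape] = max(weights.get(shape, 0.0), w)
    return weights


timeline = [("MM", 0.0, 0.1), ("AA", 0.1, 0.3), ("OO", 0.3, 0.45)]
frame = lip_sync_frame(timeline, 0.2)  # sampled in the middle of "AA"
```

Calling `lip_sync_frame` once per rendered frame gives a dictionary that could be written to the mesh blend shapes. The fades make neighbouring phonemes overlap slightly instead of snapping.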

My plan is to:

- Develop an emotion path dataset

- Use a periodic autoencoder to generate paths

- Record and blend multiple paths procedurally
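
The last step of the plan, blending recorded paths, could start from something as simple as a per-frame weighted average. The sketch below is not the periodic autoencoder, just a baseline for the blending step. It assumes all paths have the same number of frames, and `blend_paths` is a made-up name. The example uses 2 values per frame to stay short; real frames would have 52.

```python
from typing import List, Sequence

Path = List[List[float]]  # frames x blend shape values


def blend_paths(paths: Sequence[Path], weights: Sequence[float]) -> Path:
    """Per-frame weighted average of equally long paths, clamped to [0, 1]."""
    if not paths or len(paths) != len(weights):
        raise ValueError("need one weight per path")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    n_frames = len(paths[0])
    if any(len(p) != n_frames for p in paths):
        raise ValueError("all paths must have the same number of frames")

    blended: Path = []
    for f in range(n_frames):
        frame = []
        for k in range(len(paths[0][f])):
            v = sum(w * p[f][k] for p, w in zip(paths, weights)) / total
            frame.append(min(1.0, max(0.0, v)))
        blended.append(frame)
    return blended


happy = [[0.0, 0.2], [0.0, 0.8]]
surprised = [[0.4, 0.0], [1.0, 0.0]]
mixed = blend_paths([happy, surprised], [0.75, 0.25])
```

Because an average of valid faces is not guaranteed to look natural, the output of a blend like this would still need to be checked against the recorded data.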

I have already achieved the following:

- Applied the blend shape array to a real face in Godot, using the blend shapes of the mesh instance.

- Created a Python tool to extract blend shapes from a video by processing each frame, following an unsuccessful attempt with VMC (due to unsatisfactory framerate handling).
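
On the framerate issue, one option is to keep a timestamp with every extracted frame and resample afterwards onto a fixed rate. The sketch below is not the extractor itself, only an illustration of that idea. It assumes the samples are sorted by time and that each one is a `(timestamp_seconds, values)` pair; `resample` is a made-up name.

```python
from bisect import bisect_right
from typing import List, Tuple

Sample = Tuple[float, List[float]]  # (timestamp in seconds, blend shape values)


def resample(samples: List[Sample], fps: float) -> List[List[float]]:
    """Linearly interpolate time-sorted samples onto a fixed-rate timeline."""
    if not samples or fps <= 0:
        raise ValueError("need at least one sample and a positive fps")
    times = [t for t, _ in samples]
    duration = times[-1] - times[0]
    n_out = int(duration * fps + 1e-9) + 1  # small epsilon guards float rounding

    out = []
    for i in range(n_out):
        t = times[0] + i / fps
        hi = min(bisect_right(times, t), len(samples) - 1)
        lo = max(hi - 1, 0)
        t0, v0 = samples[lo]
        t1, v1 = samples[hi]
        # Interpolation factor between the two surrounding samples.
        a = 0.0 if t1 == t0 else min(1.0, max(0.0, (t - t0) / (t1 - t0)))
        out.append([x + (y - x) * a for x, y in zip(v0, v1)])
    return out


# Unevenly spaced capture (one value per frame to keep the example short).
captured = [(0.0, [0.0]), (0.25, [1.0]), (1.0, [0.4])]
fixed = resample(captured, fps=4)
```

With this approach the playback rate in Godot no longer has to match the rate of the source video.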

Here's an example of a Vroid model with blend shapes in Godot:

The Python extractor that transforms a video into blend shape values over time:

Please feel free to share any ideas on creating high-quality data, efficiently animating the MeshInstance3D, or any other useful insights.